Not only do submarines lack screen doors, but most don’t have windows at all. Submerged submarines navigate primarily with sonar (radar works only at the surface), and in high-stealth situations they are forced to travel blind, using nothing but measurements and maps to estimate their position.

Piloting a submarine requires an intimate knowledge of the available data and confidence in that data’s health – piloting a business is not much different. A data observability platform helps organizations monitor their data health and address issues as they arise.

Data observability is an important discipline for businesses, but choosing the right data observability platform is equally important. This guide will explain the fundamentals of data observability platforms, how they can help your organization, and what qualities you should look for in choosing your data observability platform.

Key Takeaways:

- Data observability is a company’s ability to monitor the health of its data.

- A data observability platform performs many automated functions built on data monitoring.

- FirstEigen’s data observability and validation tools can help keep your data safe.

Defining Data Observability

Data visualization is a graphical representation of data. Data observability, though similarly named, is a very different concept:

Data observability is a blanket term for an organization’s ability to understand, diagnose, and manage data health.

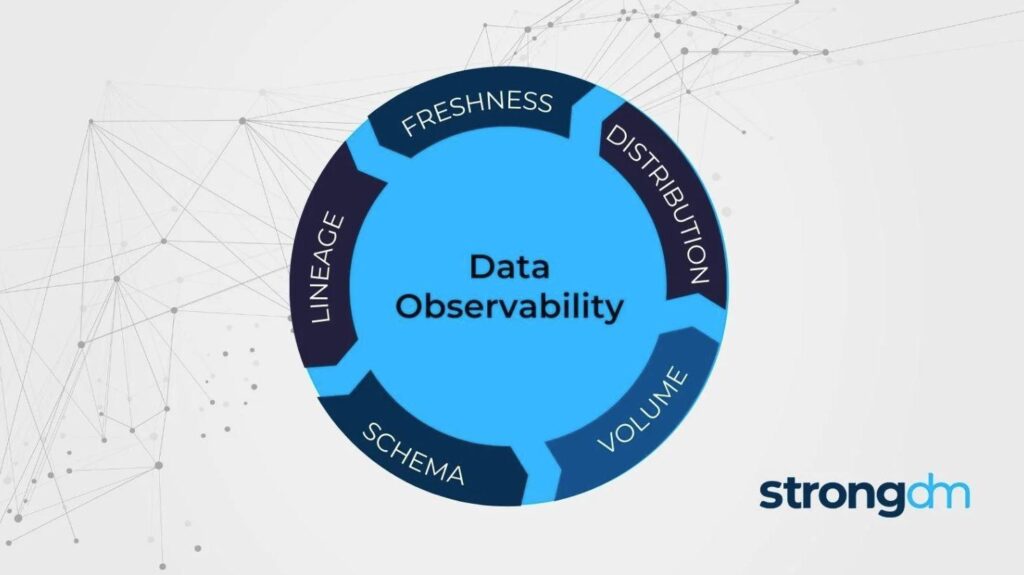

This leads to a nesting doll of definitions – to understand data observability, you have to define data health. Data health includes five core concepts:

- Freshness measures how up to date your data is. “Stale” data is out of date, while data you regularly update is “fresh.”

- Distribution sets parameters for your expected results. For example, if you expect a data field to fall between 1 and 10, a value of 800 would trigger a red flag to indicate that your data may be unreliable.

- Volume complements distribution. While distribution sets parameters for the range of values, volume sets parameters for the number of records you expect.

- Schema tracks changes to the “blueprint” of your data, such as added, removed, or retyped fields, that can indicate inconsistent structure.

- Lineage traces data both upstream, to its sources, and downstream, to its end users. Monitoring lineage helps you spot irregularities in how your data is sourced and used.
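To make these concepts concrete, here is a minimal Python sketch of how freshness, distribution, volume, and schema checks might run against one batch of records, plus a lineage lookup that lists where a dataset’s data comes from. The table, field names, thresholds, and lineage graph are all hypothetical. A real platform would learn or configure these per dataset.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical expectations for a single "orders" table (illustrative values only).
EXPECTED_SCHEMA = {"order_id": int, "score": int, "updated_at": datetime}
SCORE_RANGE = (1, 10)          # distribution: expected range for "score"
ROW_COUNT_RANGE = (2, 1_000)   # volume: expected records per batch
MAX_AGE = timedelta(hours=24)  # freshness: newest record must be younger than this

# Lineage: each dataset mapped to the datasets it is built from (hypothetical names).
LINEAGE = {"dashboard": ["orders"], "orders": ["crm_export", "web_events"]}


def upstream_sources(dataset, lineage=LINEAGE):
    """Walk the lineage graph to find every upstream source of a dataset."""
    found, stack = set(), [dataset]
    while stack:
        for parent in lineage.get(stack.pop(), []):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found


def check_batch(rows, now):
    """Return a list of data health issues found in one batch of records."""
    issues = []

    # Volume: is the record count inside the expected range?
    lo, hi = ROW_COUNT_RANGE
    if not lo <= len(rows) <= hi:
        issues.append(f"volume: {len(rows)} rows, expected {lo}-{hi}")

    valid_times = []
    for i, row in enumerate(rows):
        # Schema: does the row have exactly the expected field names and types?
        if set(row) != set(EXPECTED_SCHEMA) or not all(
            isinstance(row[f], t) for f, t in EXPECTED_SCHEMA.items()
        ):
            issues.append(f"schema: row {i} does not match the expected schema")
            continue
        # Distribution: is the value inside the expected range?
        if not SCORE_RANGE[0] <= row["score"] <= SCORE_RANGE[1]:
            issues.append(f"distribution: row {i} has score {row['score']}")
        valid_times.append(row["updated_at"])

    # Freshness: how old is the newest well-formed record?
    if valid_times and now - max(valid_times) > MAX_AGE:
        issues.append(f"freshness: newest record is {now - max(valid_times)} old")
    return issues


now = datetime(2024, 1, 2, tzinfo=timezone.utc)
batch = [
    {"order_id": 1, "score": 7, "updated_at": now - timedelta(hours=2)},
    {"order_id": 2, "score": 800, "updated_at": now - timedelta(hours=3)},
    {"order_id": 3, "score": "7"},  # missing a field and wrong type
]
for issue in check_batch(batch, now):
    print(issue)  # flags the distribution issue in row 1 and the schema issue in row 2
print(sorted(upstream_sources("dashboard")))  # ['crm_export', 'orders', 'web_events']
```

When a check fails on `dashboard`, the lineage lookup tells you which upstream sources to inspect first.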

What Does a Data Observability Platform Do?

Knowledge is power, but only if you know how to use it.

A data observability platform accomplishes several tasks to promote your organization’s data health:

- Monitoring is a high-level view of your data ecosystem.

- Alerting makes users aware of anomalies and data health risks.

- Tracking records specific events and metrics over time.

- Comparison checks data fields against each other and against their own historical values.

- Analysis detects and diagnoses data health issues.

- Logging records events in a standardized form for future reference.

- SLA (Service-Level Agreement) monitoring tracks whether data and services meet the targets set in their service-level agreements, such as delivery deadlines.
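Several of these functions can be sketched together in a few dozen lines. The Python example below is a minimal, hypothetical tracker; the `MetricTracker` class, its three-standard-deviation threshold, and the JSON log format are assumptions, not any platform’s actual API. It tracks one metric over time, compares each new value with the metric’s own history, raises an alert when the value lands far from the historical mean, writes every event as a standardized JSON line, and checks a freshness SLA.

```python
import json
import statistics
from datetime import datetime, timedelta, timezone


class MetricTracker:
    """Hypothetical tracker for one metric: tracking, comparison, alerting, logging, SLA."""

    def __init__(self, name, z_threshold=3.0, min_history=5):
        self.name = name
        self.z_threshold = z_threshold  # standard deviations from the mean that count as anomalous
        self.min_history = min_history  # observations needed before comparing
        self.history = []               # tracking: (timestamp, value) pairs
        self.log = []                   # logging: one standardized JSON line per event

    def _log(self, ts, event, **details):
        entry = {"ts": ts.isoformat(), "metric": self.name, "event": event, **details}
        self.log.append(json.dumps(entry, sort_keys=True))
        return entry

    def record(self, ts, value):
        """Track a new value, compare it with the metric's history, return an alert or None."""
        past = [v for _, v in self.history]
        self.history.append((ts, value))
        self._log(ts, "observed", value=value)
        if len(past) < self.min_history:
            return None
        mean, stdev = statistics.mean(past), statistics.pstdev(past)
        # Comparison: how far is the new value from the metric's own past values?
        if stdev == 0:
            anomalous = value != mean
        else:
            anomalous = abs(value - mean) / stdev > self.z_threshold
        # Alerting: surface the anomaly as a structured event.
        return self._log(ts, "alert", value=value, mean=mean) if anomalous else None

    def check_sla(self, now, max_delay):
        """SLA monitoring: flag a breach if no value arrived within the agreed delay."""
        if not self.history or now - self.history[-1][0] > max_delay:
            return self._log(now, "sla_breach", max_delay_s=max_delay.total_seconds())
        return None


# Usage: a daily row count that suddenly collapses, then stops arriving.
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
row_count = MetricTracker("orders.row_count")
for day, count in enumerate([1000, 1020, 990, 1010, 1005, 40]):
    alert = row_count.record(start + timedelta(days=day), count)
    if alert:
        print("ALERT:", alert)
print(row_count.check_sla(start + timedelta(days=8), timedelta(days=1)))
```

A z-score against past values is one of the simplest comparison methods. It assumes the metric is roughly stable, so a seasonal metric would need a more careful baseline.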

Of these key functions, most people associate data observability with monitoring because it’s the first step. However, monitoring only detects a predefined set of failure modes, whereas the full practice of data observability helps users not only find issues but also diagnose and track them.

Why You Need a Data Observability Platform

While users still have the option to manually monitor their own data, a data observability platform can do several things that human users simply cannot.

One important reason companies use data observability platforms is that the quantity of data businesses use grows dramatically every year, yet many businesses don’t get the full value out of it. Corporate giants like Google, Meta, and Amazon succeed in part because they invest heavily in the health and value of their data.

While most consumers know that Google and Meta make revenue primarily through advertising, you may be surprised to hear that Amazon now earns more from its data-driven advertising business than from all of its subscription services, including Prime, Prime Video, Audible, and its music subscriptions, combined.

This data is the lifeblood of modern business, but as data volume continues to rise year after year, businesses have to use new tools to adapt.

A data observability platform can monitor far more data than human users can, and AI and machine learning tools excel at finding hidden patterns in large datasets.

Simply put, a data observability platform multiplies the productivity of human users by surfacing actionable insights instead of leaving them to sift through raw numbers.

Choosing a Data Observability Platform

Data observability is an essential attribute of any business that uses data (which in today’s digital-first landscape includes most businesses). However, not all data observability platforms are equally useful to your organization. Look out for these qualities when choosing a platform:

Autonomous

Just like a Roomba or a self-driving car, an autonomous data observability platform still requires some human oversight. However, autonomous technologies are, by definition, able to respond to stimuli without human help. In a data observability platform, this lets your organization detect irregularities early and often and respond to alerts almost immediately.

Compatible

Your data observability platform has to be compatible with your databases, data lakes, and cloud storage solutions. Ideally, your data observability platform should be “plug and play,” meaning it works from day one with little to no rule-writing.
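One way a platform can work with “little to no rule-writing” is to profile a trusted sample of existing data and derive rules from it. The Python sketch below illustrates that idea under simple assumptions. The `infer_rules` and `validate` functions, the sample records, and the 10% range margin are hypothetical, and this is not a description of how any particular product infers its rules.

```python
from collections import defaultdict


def infer_rules(sample, margin=0.1):
    """Profile a trusted sample and derive per-field rules with no hand-written checks."""
    values = defaultdict(list)
    for row in sample:
        for field, value in row.items():
            values[field].append(value)
    rules = {}
    for field, vals in values.items():
        present = [v for v in vals if v is not None]
        rule = {
            "types": {type(v).__name__ for v in present},
            # Nullable if the field was ever None or missing in the sample.
            "nullable": len(present) < len(sample),
        }
        numeric = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numeric and len(numeric) == len(present):
            # Learned range, widened by a margin so normal variation isn't flagged.
            span = max(numeric) - min(numeric)
            rule["min"] = min(numeric) - margin * span
            rule["max"] = max(numeric) + margin * span
        rules[field] = rule
    return rules


def validate(row, rules):
    """Check one record against the inferred rules; return a list of problems."""
    problems = []
    for field, rule in rules.items():
        value = row.get(field)
        if value is None:
            if not rule["nullable"]:
                problems.append(f"{field}: missing value")
            continue
        if type(value).__name__ not in rule["types"]:
            problems.append(f"{field}: unexpected type {type(value).__name__}")
            continue
        if "min" in rule and not rule["min"] <= value <= rule["max"]:
            problems.append(f"{field}: {value} outside learned range {rule['min']:g}-{rule['max']:g}")
    # Schema drift: fields the sample never contained.
    for field in sorted(row.keys() - rules.keys()):
        problems.append(f"{field}: field not seen in sample")
    return problems


sample = [
    {"score": 3, "quantity": 10, "region": "EU"},
    {"score": 9, "quantity": 12, "region": None},
    {"score": 6, "quantity": 11, "region": "US"},
]
rules = infer_rules(sample)
print(validate({"score": 800, "quantity": 11, "region": "EU", "coupon": "X"}, rules))
```

Rules learned this way are only as good as the sample they come from. A field such as an order ID would need to be excluded from range learning, which is why production tools typically let users review or override inferred rules.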

Sophisticated

On paper, an air fryer and an Easy-Bake Oven do the same thing. They are similarly sized and contain similar heating elements, but that’s where the comparison ends: the Easy-Bake Oven is a low-temperature, low-tech toy oven with limited use cases, while an air fryer can include sophisticated automation, attachments for cooking everything from French fries to rotisserie chicken, and all the functionality an adult would expect from a cooking appliance.

Likewise, many data observability platforms look alike on paper, but there is a world of difference between them. The best data observability platforms use AI (Artificial Intelligence) and ML (Machine Learning) to find hard-to-detect errors.
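What counts as a “hard-to-detect” error depends on the platform, and the AI and ML techniques behind commercial tools aren’t described here. As a simple statistical stand-in, the sketch below shows one such error: a distribution shift in which every value still passes a 1-to-10 range check. It compares today’s values with a baseline using a two-sample Kolmogorov-Smirnov statistic written in plain Python. The function names and sample data are hypothetical, and the 1.358 coefficient is the standard large-sample critical value at a 5% significance level, used here as an approximation.

```python
import math


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance past every value <= x in both samples (handles ties correctly).
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        gap = max(gap, abs(i / len(a) - j / len(b)))
    return gap


def drifted(baseline, current, c_alpha=1.358):
    """Flag drift when the KS statistic exceeds the large-sample critical value (alpha = 0.05)."""
    n, m = len(baseline), len(current)
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(baseline, current) > critical


# Hypothetical daily "score" values: every one passes a 1-10 range rule.
baseline = [5, 4, 6, 5, 5, 4, 6, 5, 4, 5]
today = [9, 8, 9, 10, 9, 8, 9, 10, 8, 9]
print(all(1 <= v <= 10 for v in today))  # True: the range rule flags nothing
print(drifted(baseline, today))          # True: the distribution check flags drift
```

For production use, a library implementation such as SciPy’s two-sample KS test also returns a p-value, which is more reliable than the large-sample approximation for small batches.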

Timely

When is the best time to find an error: a week late or a month late? Neither is good enough for your business. Your data observability platform should monitor data health continuously, from the moment you add data to your ecosystem to the end of its lifecycle.

Finding errors early stops issues before they snowball out of control. Fixing an error downstream can be 10x as expensive as fixing it upstream, and the best data observability platforms should help you catch errors as early as possible.
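Catching errors upstream usually means validating data at the point of ingestion, before it reaches reports or models. Here is a minimal Python sketch of such a gate. The `ingest` function, the check names, and the `load`/`quarantine` callables (plain lists standing in for a warehouse and a quarantine table) are hypothetical.

```python
def ingest(batch, checks, load, quarantine):
    """Validate each record at the point of entry; only clean records move downstream."""
    accepted, rejected = [], []
    for record in batch:
        # Run every check and remember which ones failed.
        failures = [name for name, check in checks.items() if not check(record)]
        if failures:
            rejected.append((record, failures))
        else:
            accepted.append(record)
    load(accepted)        # e.g., write to the warehouse
    quarantine(rejected)  # park for review instead of silently dropping
    return len(accepted), len(rejected)


# Hypothetical checks for an "orders" feed.
CHECKS = {
    "has_id": lambda r: r.get("order_id") is not None,
    "score_in_range": lambda r: isinstance(r.get("score"), int) and 1 <= r["score"] <= 10,
}

warehouse, quarantine_table = [], []
batch = [{"order_id": 1, "score": 4}, {"order_id": None, "score": 5}, {"order_id": 3, "score": 800}]
print(ingest(batch, CHECKS, warehouse.extend, quarantine_table.extend))  # (1, 2)
```

Quarantining rejected records, rather than dropping them, keeps the failure reasons next to the data so someone can fix the source instead of patching reports downstream.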

FirstEigen DataBuck Observability

Autonomous, compatible, sophisticated, and timely – these are the qualities you should look for in a data observability platform, and they’re also the qualities of FirstEigen’s DataBuck observability tool.

DataBuck monitors for changes in value distribution, record volume, data freshness, and changes in file structure so you can rest assured that your valuable data is healthy and reliable.

Without the right platform, companies are only able to monitor a fraction of their data. With FirstEigen, you can monitor 100% of your data, keeping your information safer and saving as much as 80% of your data validation labor.

Ready to take control of your data? Learn more about FirstEigen’s data validation and observability tools today.


Elevate Your Organization’s Data Quality with DataBuck by FirstEigen

DataBuck enables autonomous data quality validation, catching 100% of system risks and minimizing the need for manual intervention. With thousands of validation checks powered by AI/ML, DataBuck allows businesses to validate entire databases and schemas in minutes rather than hours or days.

To learn more about DataBuck and schedule a demo, contact FirstEigen today.