Autonomous Data Trust Monitor with ML

Upstream Data Trust Monitor minimizes unknown errors downstream

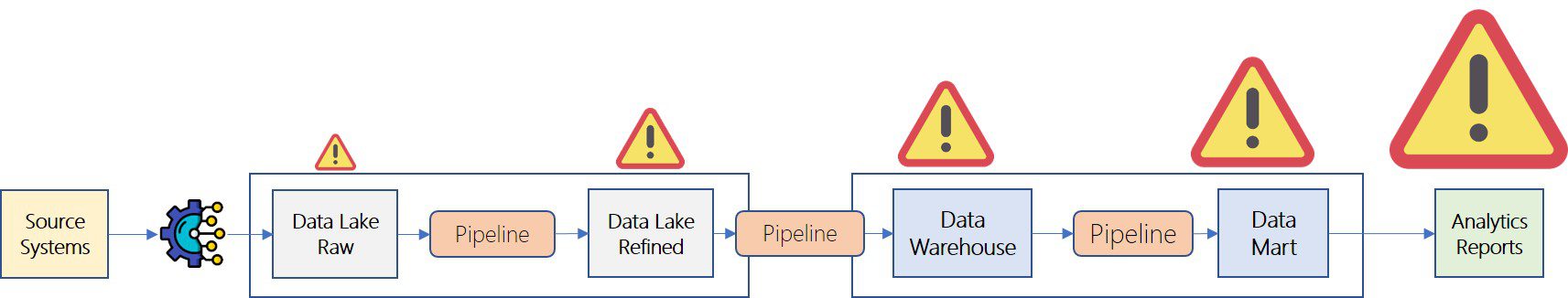

Data errors get amplified as they flow downstream through the data pipeline.

Despite investments in data quality (DQ) and observability tools, a lack of trust in data leads to:

- A 40% failure rate in data initiatives

- A 20% drop in labor productivity

What is Trustability?

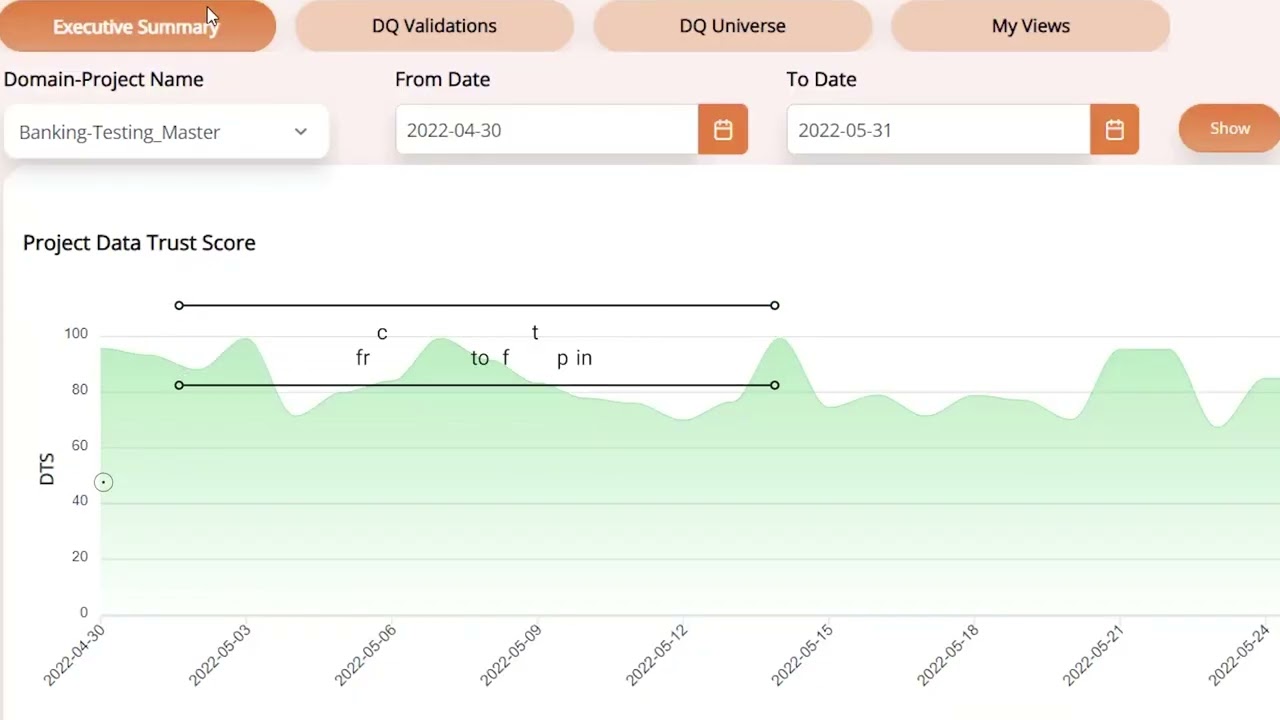

Data trustability is sought by data catalog teams and data management teams.

- Data Profile

- Objective Data Trust Score (DTS) for every DQ dimension with AI/ML

- Aggregate DTS
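The page does not say how DataBuck computes its per-dimension Data Trust Scores or rolls them into an aggregate. As a hypothetical sketch only, the arithmetic can look like this: each dimension score is the share of values that pass a check, and the aggregate is a weighted mean. The dimension functions, the 0 to 1 scale, and equal default weights are all assumptions, and the checks here are simple rules rather than ML.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, object]


def _present(records: List[Record], column: str) -> List[object]:
    # Non-missing values of one column
    return [r.get(column) for r in records if r.get(column) is not None]


def completeness(records: List[Record], column: str) -> float:
    """Hypothetical dimension: share of records in which `column` has a value."""
    if not records:
        return 1.0
    return len(_present(records, column)) / len(records)


def uniqueness(records: List[Record], column: str) -> float:
    """Hypothetical dimension: share of non-missing values that are distinct."""
    values = _present(records, column)
    return len(set(values)) / len(values) if values else 1.0


def validity(records: List[Record], column: str, predicate: Callable[[object], bool]) -> float:
    """Hypothetical dimension: share of non-missing values that satisfy `predicate`."""
    values = _present(records, column)
    return sum(1 for v in values if predicate(v)) / len(values) if values else 1.0


def aggregate_dts(scores: Dict[str, float], weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted mean of per-dimension scores; equal weights by default (an assumption)."""
    if weights is None:
        weights = {dim: 1.0 for dim in scores}
    total = sum(weights[dim] for dim in scores)
    return sum(scores[dim] * weights[dim] for dim in scores) / total


records = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},
    {"id": 2, "amount": -5.0},
    {"id": 3, "amount": 7.5},
]
scores = {
    "completeness": completeness(records, "amount"),                 # 3 of 4 present
    "uniqueness": uniqueness(records, "id"),                         # 3 distinct of 4
    "validity": validity(records, "amount", lambda v: v >= 0),       # 2 of 3 non-negative
}
print(round(aggregate_dts(scores), 3))  # 0.722
```

Weighting lets a team decide that, say, validity matters more than uniqueness for a given dataset without changing the per-dimension scores themselves.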

Trustability throughout the Data Pipeline

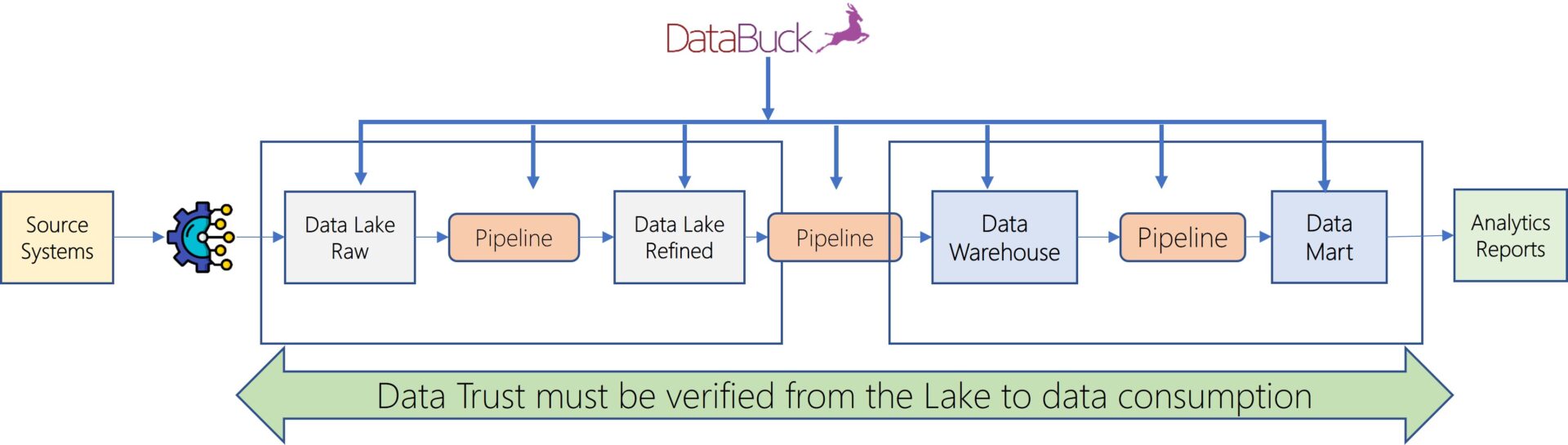

- Data fingerprint

- Self-learning

- Dynamically evolves

- Known-known errors

- Unknown-unknown errors

- Objective Data Trust Score

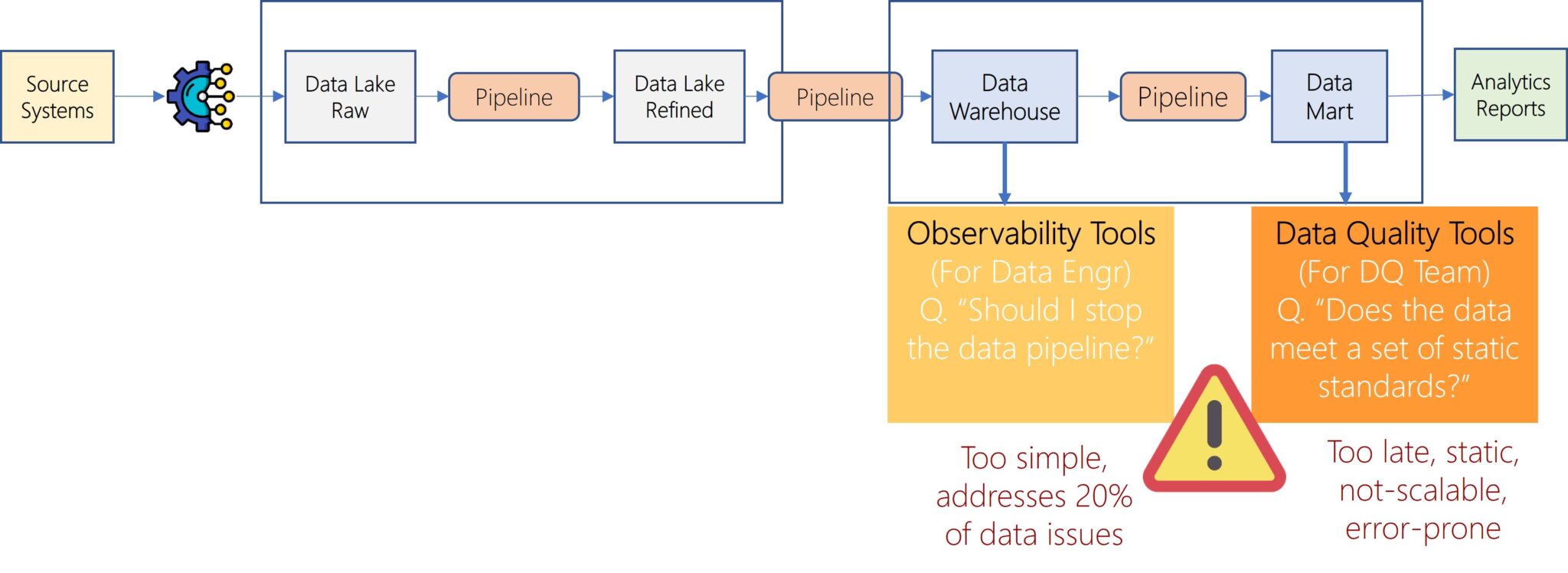

Challenges with Traditional, Non-ML Approaches to Determining Trustability

Knowledge Gap

Data quality analysts are often unfamiliar with data assets obtained from third parties, whether in a public or private context. They must engage extensively with subject matter experts to build data quality criteria.

In the Snowflake Data Cloud, where organizations share datasets with each other, data quality analysts may not have access to the subject matter experts at the organization that provided the data.

Processing Time

Time to Use the Dataset: Even if you are intimately familiar with the dataset, analyzing its data quality can take 2 to 5 business days.

The Snowflake Data Cloud drastically reduces data exchange time. However, spending additional days on manual data quality analysis lengthens the timeline and defeats that purpose.

Why Use a Machine Learning-Based Approach?

Machine learning can solve complex pattern-detection problems much faster than manual analysis, and it removes the human error that creeps into hand-built data quality checks.

Using ML in the Snowflake Data Cloud has several advantages:

- Machine learning helps to objectively determine data patterns, or data fingerprints, and translate those patterns into data quality rules.

- Machine Learning can then use the data fingerprints to detect transactions that do not adhere to the rules.

- An ML approach makes it possible to run a data health check quickly.
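The fingerprint-to-rule idea above can be illustrated with a deliberately simple stand-in. This is a hypothetical sketch, not DataBuck's algorithm: it "learns" a per-column profile from a trusted baseline using plain statistics (a mean plus or minus k standard deviations for numeric columns, the set of seen values for categorical ones, and whether nulls occurred), turns that profile into rules, and flags records that break them. The function names, the `k = 3.0` default, and the statistical profile itself are assumptions; a production system would use richer models.

```python
import statistics
from typing import Dict, List

Record = Dict[str, object]


def _is_number(value: object) -> bool:
    # bool is a subclass of int in Python; treat it as a category, not a number
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def learn_fingerprint(baseline: List[Record], k: float = 3.0) -> Dict[str, dict]:
    """Profile each column of a trusted baseline and turn the profile into a rule."""
    rules: Dict[str, dict] = {}
    for col in sorted({c for record in baseline for c in record}):
        raw = [record.get(col) for record in baseline]
        values = [v for v in raw if v is not None]
        # A column may be null later only if the baseline already had nulls
        rule: dict = {"nullable": len(values) < len(raw)}
        if values and all(_is_number(v) for v in values):
            mean = statistics.fmean(values)
            spread = k * statistics.pstdev(values)
            rule.update(kind="range", low=mean - spread, high=mean + spread)
        else:
            rule.update(kind="category", allowed=set(values))
        rules[col] = rule
    return rules


def violations(record: Record, rules: Dict[str, dict]) -> List[str]:
    """Return the columns of `record` that break the learned rules."""
    broken = []
    for col, rule in rules.items():
        value = record.get(col)
        if value is None:
            if not rule["nullable"]:
                broken.append(col)
        elif rule["kind"] == "range":
            if not _is_number(value) or not rule["low"] <= value <= rule["high"]:
                broken.append(col)
        elif value not in rule["allowed"]:
            broken.append(col)
    return broken


baseline = [
    {"amount": 100.0, "currency": "USD"},
    {"amount": 110.0, "currency": "USD"},
    {"amount": 90.0, "currency": "EUR"},
    {"amount": 105.0, "currency": "USD"},
    {"amount": 95.0, "currency": "EUR"},
]
rules = learn_fingerprint(baseline)
print(violations({"amount": 500.0, "currency": "GBP"}, rules))  # ['amount', 'currency']
```

Because the rules come from the data rather than from a subject matter expert, re-running `learn_fingerprint` on newer trusted data lets the rules evolve as the data does.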

ML is usually more comprehensive and accurate than a human-driven data quality analysis.

Powered by ML, DataBuck continuously monitors data trustability across the entire data pipeline. It validates trust from the data lake to data consumption (L2C).

Platforms Supported by DataBuck

- Data Lake: AWS, Azure, GCP

- Data Warehouse: Snowflake, Redshift, BigQuery, Cosmos, Postgres

- Data Pipeline: Glue, Airflow, Databricks, Dataflow

Autonomous Data Trust Score With DataBuck


What Data Sources Can DataBuck Work With?

DataBuck can accept data from all major data sources, including Hadoop, Cloudera, Hortonworks, MapR, HBase, Hive, MongoDB, Cassandra, Datastax, HP Vertica, Teradata, Oracle, MySQL, MS SQL, SAP, Amazon AWS, MS Azure, and more.