How well does your organization integrate data from multiple sources? Effective data integration is critical to turning raw data into actionable insights. You need a data integration solution that takes data from disparate, often incompatible sources, monitors its quality, and stores that data in an easily accessible format that everyone in your organization can use. Read on to learn more about the challenges, best practices, and available tools for creating a state-of-the-art data integration solution.

Quick Takeaways

- Data integration consolidates data from multiple sources into a single unified view

- The major challenges to effective data integration include ensuring data quality, dealing with system bottlenecks, ingesting data in real time, and ensuring a high level of data security

- Best practices for data integration include defining clear goals, understanding the data being integrated, employing robust data quality management, and focusing on data security

Understanding Data Integration

MicroStrategy Incorporated reports that 59% of all organizations worldwide use big data analytics. This requires the ingestion of large volumes of data from multiple, often disparate sources, including internal and external databases, CRM systems, cloud services, social media, and more.

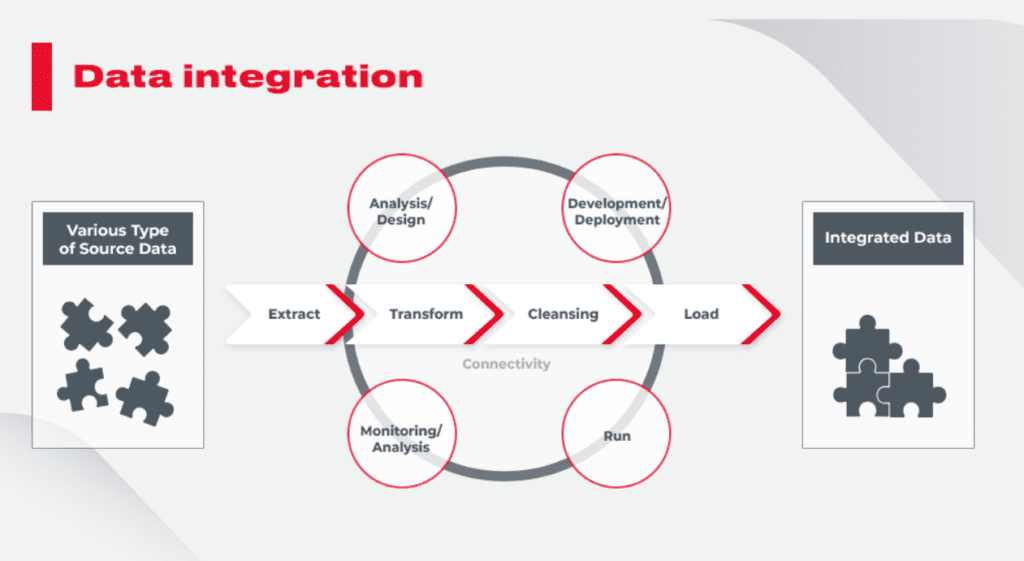

Combining and harmonizing data from all these different sources requires data integration. Data integration is the process of taking data in many different formats and bringing it together into a single unified view that users in your organization can access and analyze.

The data integration process involves extracting data from its original source(s), transforming it into a standardized format, monitoring its quality, cleansing bad or suspect data, and loading the result into secure, easily accessible storage. The goal is data that can be used confidently for reporting, analysis, and decision-making.
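To make those stages concrete, here is a minimal Python sketch of an extract, transform, cleanse, and load flow. It assumes two small hypothetical sources (a CRM export in CSV and a JSON payload from a web service) and uses an in-memory SQLite database as a stand-in for secure storage; the field names and the single email rule are illustrative, not a recommended schema.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs standing in for two disparate sources:
# a CRM export in CSV and a web-service payload in JSON.
CRM_CSV = "customer_id,full_name,email\n1,Ada Lovelace,ADA@EXAMPLE.COM\n2,Alan Turing,\n"
API_JSON = '[{"id": 3, "name": "Grace Hopper", "mail": "grace@example.com"}]'


def extract():
    """Extract: read records from each source in its native format."""
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    api_rows = json.loads(API_JSON)
    return crm_rows, api_rows


def transform(crm_rows, api_rows):
    """Transform: map both sources onto one standardized schema."""
    unified = []
    for r in crm_rows:
        unified.append({"id": int(r["customer_id"]), "name": r["full_name"], "email": r["email"]})
    for r in api_rows:
        unified.append({"id": int(r["id"]), "name": r["name"], "email": r["mail"]})
    return unified


def cleanse(records):
    """Monitor and cleanse: normalize values and set aside suspect records."""
    good, rejected = [], []
    for rec in records:
        email = rec["email"].strip().lower()
        if "@" not in email:
            rejected.append(rec)  # isolate for follow-up rather than load it
        else:
            good.append({**rec, "email": email})
    return good, rejected


def load(records, conn):
    """Load: write clean records into a single queryable store."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (:id, :name, :email)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
good, rejected = cleanse(transform(*extract()))
load(good, conn)
print(conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0], "loaded,", len(rejected), "rejected")
```

Keeping each stage in its own function makes it testable on its own, and rejected records are retained rather than silently dropped so someone can review them.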

Primary Challenges of Data Integration

Integrating large volumes of data can be a challenging process. In particular, pay attention to the following potential issues:

- Ensuring adequate data quality

- Mapping and transforming data from multiple formats and structures

- Integrating data that is ingested in real time

- Dealing with data bottlenecks caused by ingesting large volumes of data at high speeds

- Understanding and managing complex relationships between multiple datasets

- Ensuring a high level of data security

- Integrating data from legacy systems

- Building a system that easily scales as your data sources and needs grow

- Monitoring and maintaining the data ingestion process
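The mapping and transformation challenge can be illustrated with a small declarative mapping. In this sketch, all source names, field names, and formats are hypothetical: each source declares which of its fields feeds each target field and how to convert the value, so dates in different formats and amounts in different units end up directly comparable.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical per-source mappings: target field -> (source field, converter).
# Each source uses its own field names, date format, and currency unit.
MAPPINGS = {
    "billing": {
        "order_id": ("OrderNo", str),
        "order_date": ("Date", lambda s: datetime.strptime(s, "%m/%d/%Y").date()),
        "amount_usd": ("AmountCents", lambda c: Decimal(int(c)) / 100),
    },
    "webshop": {
        "order_id": ("id", str),
        "order_date": ("created", lambda s: datetime.strptime(s, "%Y-%m-%d").date()),
        "amount_usd": ("total", Decimal),
    },
}


def map_record(source, raw):
    """Translate one raw record from `source` into the unified target schema."""
    mapping = MAPPINGS[source]
    return {target: convert(raw[field]) for target, (field, convert) in mapping.items()}


a = map_record("billing", {"OrderNo": 1001, "Date": "03/15/2024", "AmountCents": "4599"})
b = map_record("webshop", {"id": "W-7", "created": "2024-03-15", "total": "12.50"})
print(a)
print(b)
```

Keeping mappings as data rather than code means onboarding a new source is mostly a matter of adding one more entry.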

Best Practices for Data Integration

To overcome these potential challenges, your organization needs to employ a series of best practices for data integration. Following these six best practices will help ensure that your data integration is successful and that you have high-quality data available for your business operations.

1. Define Clear Business Goals

Before you decide on a data integration solution, you need to set goals for what you want to accomplish. You need a clear idea of what you hope to achieve with the data integration before you can build an appropriate solution.

This means setting both short- and long-term objectives in terms of both quantifiable and more subjective metrics. Look at ROI, yes, but also look at how the data integration will improve your business processes and decision-making.

2. Understand the Data You’re Integrating

How well do you know the data you intend to integrate? You need to understand each data source's structure, format, semantics, and contents. You need to know where the data comes from to understand its strengths and weaknesses. The better you understand your data and data sources, the more precisely you can fine-tune your data integration process.
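One practical way to learn a source's structure and contents is to profile a sample before integrating it. The sketch below is a minimal profiler over a list of dictionaries, and the sample records are hypothetical. For each field it reports which Python types appear, how many values are missing, how many distinct values exist, and the most common value, which is often enough to surface mixed types or inconsistent casing.

```python
from collections import Counter


def profile(records):
    """Summarize each field: observed types, missing values, distinct values, top value."""
    fields = sorted({key for rec in records for key in rec})
    report = {}
    for field in fields:
        values = [rec.get(field) for rec in records]
        # Treat absent keys, None, and empty strings all as "missing".
        present = [v for v in values if v not in (None, "")]
        report[field] = {
            "types": sorted({type(v).__name__ for v in present}),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "top": Counter(present).most_common(1),
        }
    return report


# Hypothetical sample drawn from one source before integration.
sample = [
    {"id": 1, "country": "US", "signup": "2024-01-05"},
    {"id": 2, "country": "us", "signup": ""},
    {"id": "3", "country": "US"},
]
for field, stats in profile(sample).items():
    print(field, stats)
```

In this sample, `id` mixes integers and strings and `country` has two spellings of the same code, the kind of issue that is cheaper to catch before integration than after.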

3. Employ Robust Data Quality Management

Data quality management is essential to effective data integration. You need to evaluate the quality of data from all sources before it's integrated, while it's being integrated, and after integration is complete. Poor-quality data needs to be identified and isolated for additional action so that your systems deal only with reliable, high-quality data. Inaccurate, incomplete, or inconsistent data can often be repaired: inaccurate data can be corrected, incomplete data can be filled in, and inconsistent data can be rationalized. Data that can't be fully cleansed can be deleted. The goal is to ensure that only the highest-quality data flows through the system, which you accomplish with robust data quality management tools such as FirstEigen's DataBuck.
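The identify, repair, and isolate cycle described above can be sketched as a simple rule-based quality gate. This is a generic illustration, not how DataBuck or any particular product works; the reference tables, field names, and thresholds are hypothetical. Repairable problems (inconsistent state names, a missing state that can be inferred from the ZIP code) are fixed in place, and records with problems no rule can fix are quarantined for follow-up instead of being loaded.

```python
# Hypothetical reference data; a real system would draw on master data.
STATE_CODES = {"california": "CA", "calif.": "CA", "ca": "CA", "new york": "NY", "ny": "NY"}
ZIP_TO_STATE = {"94105": "CA", "10001": "NY"}
VALID_STATES = {"CA", "NY"}


def repair(raw):
    """Apply repair rules, then return (record, unresolved_issues)."""
    rec = dict(raw)
    # Inconsistent: rationalize free-text state names to a standard code.
    if rec.get("state"):
        rec["state"] = STATE_CODES.get(rec["state"].strip().lower(), rec["state"])
    # Incomplete: fill in a missing state from the ZIP code when it is known.
    if not rec.get("state") and rec.get("zip") in ZIP_TO_STATE:
        rec["state"] = ZIP_TO_STATE[rec["zip"]]
    # Inaccurate: flag values that no repair rule can fix.
    issues = []
    age = rec.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        issues.append("age out of range")
    if rec.get("state") not in VALID_STATES:
        issues.append("unknown state")
    return rec, issues


def quality_gate(records):
    """Split records into clean ones and a quarantine list for follow-up."""
    clean, quarantined = [], []
    for raw in records:
        rec, issues = repair(raw)
        if issues:
            quarantined.append({"record": rec, "issues": issues})
        else:
            clean.append(rec)
    return clean, quarantined


records = [
    {"id": 1, "state": "Calif.", "zip": "94105", "age": 34},
    {"id": 2, "state": "", "zip": "10001", "age": 51},
    {"id": 3, "state": "Texas", "zip": "73301", "age": 212},
]
clean, quarantined = quality_gate(records)
print(len(clean), "clean;", len(quarantined), "quarantined")
```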

4. Implement Strict Data Governance

Effective data governance helps you manage the availability and usability of the data you collect. To ensure data governance that works for you, consider the following:

- Create a data catalog using detailed metadata about the data being integrated

- Employ data lineage tracking to trace data as it flows through the pipeline from its initial source to its final destination, so you can verify its accuracy at each step

- Stress data stewardship to ensure that given teams or individuals are responsible for managing specific data
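Data lineage tracking can start as simply as recording, for every pipeline step, a fingerprint of what went in and what came out. The sketch below is a hypothetical in-memory version built from a decorator and SHA-256 hashes from Python's standard library; the step functions and records are illustrative, and production lineage tools persist this metadata and link it to a data catalog. Because each step's input fingerprint matches the previous step's output fingerprint, a final dataset can be traced back to its source.

```python
import functools
import hashlib
import json
from datetime import datetime, timezone

lineage = []  # hypothetical in-memory lineage log; real systems persist this


def fingerprint(data):
    """Stable short hash of a JSON-serializable dataset."""
    blob = json.dumps(data, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()[:12]


def tracked(step_name):
    """Decorator that records each step's input and output fingerprints."""
    def wrap(func):
        @functools.wraps(func)
        def inner(data):
            result = func(data)
            lineage.append({
                "step": step_name,
                "input": fingerprint(data),
                "output": fingerprint(result),
                "rows_in": len(data),
                "rows_out": len(result),
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return inner
    return wrap


@tracked("drop_missing_email")
def drop_missing_email(rows):
    return [r for r in rows if r.get("email")]


@tracked("lowercase_email")
def lowercase_email(rows):
    return [{**r, "email": r["email"].lower()} for r in rows]


source = [{"id": 1, "email": "A@X.COM"}, {"id": 2, "email": None}]
final = lowercase_email(drop_missing_email(source))
for entry in lineage:
    print(entry["step"], entry["input"], "->", entry["output"], entry["rows_in"], "->", entry["rows_out"])
```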

5. Create a Scalable Solution

Any data integration solution you employ must be able to grow as your organization and its data usage grow. Your solution must scale quickly and easily from your present needs to your future needs without missing a beat or breaking the bank. You don't want your data integration system to slow down or clog up as you ingest increasing amounts of data, nor do you want to spend ever larger amounts of money to keep the system up to date. You need a solution that can scale either up or down as your data needs evolve.
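One ingredient of scalability is processing data in bounded batches, so memory use stays flat as volumes grow. The minimal sketch below assumes a hypothetical record generator as the source and uses a row counter as a stand-in for the real transform-and-load work. It does not address horizontal scaling or cost, which depend on your platform.

```python
from itertools import islice


def batched(iterable, size):
    """Yield lists of up to `size` items so memory use stays bounded."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def record_stream(n):
    """Hypothetical source that yields records one at a time."""
    for i in range(n):
        yield {"id": i, "value": i * 2}


def integrate(stream, batch_size=1000):
    """Process a stream batch by batch; the loop is the same at any volume."""
    batches = rows = 0
    for batch in batched(stream, batch_size):
        rows += len(batch)  # stand-in for transforming and loading one batch
        batches += 1
    return batches, rows


print(integrate(record_stream(10_500)))
```

Because only one batch is held in memory at a time, the same code handles ten thousand or ten million records; `batch_size` is the knob that trades memory for per-batch overhead.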

6. Focus on Data Security

Integrating and storing data isn’t enough. All the data your system ingests must be secured throughout the process to minimize the risk of data breaches, theft, and ransomware attacks.

To enhance your system’s data security, consider employing some or all of the following:

- Data encryption

- Access controls

- Secure data transfer protocols

- Anti-malware tools

- Endpoint protection

- Firewalls

- Multi-factor authentication
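As one illustration of secure data transfer, a sender can attach an HMAC tag to each batch so the receiver can detect tampering. The sketch uses Python's standard `hmac`, `hashlib`, and `secrets` modules; the payload and the locally generated key are hypothetical stand-ins (a real key would come from a secrets manager). HMAC protects integrity and authenticity only, not confidentiality, so it complements encryption in transit rather than replacing it.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical shared key; in practice it would come from a secrets manager.
SHARED_KEY = secrets.token_bytes(32)


def sign(payload: dict, key: bytes) -> tuple[bytes, str]:
    """Serialize a payload deterministically and compute an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return body, tag


def verify(body: bytes, tag: str, key: bytes) -> dict:
    """Reject the payload unless its tag matches; compare_digest avoids timing leaks."""
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("integrity check failed: payload altered or wrong key")
    return json.loads(body)


body, tag = sign({"batch": 42, "rows": 1000}, SHARED_KEY)
print(verify(body, tag, SHARED_KEY))

# Simulate tampering in transit: the original tag no longer matches.
tampered = body.replace(b"1000", b"9999")
try:
    verify(tampered, tag, SHARED_KEY)
except ValueError as err:
    print(err)
```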

Top Data Integration Tools

Perhaps the most effective best practice is choosing the best integration tool for your specific needs. You'll want to evaluate tools based on a number of factors, including the amount and complexity of your data, how that data is ingested (batch or streaming), the types of data sources you use, overall performance, ease of use, and cost.
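One common way to weigh those factors against each other is a weighted scoring matrix. In the sketch below, the weights and 1 to 5 ratings are entirely hypothetical and the candidates are unnamed placeholders, not assessments of any real product; the criteria mirror the factors listed above.

```python
# Hypothetical weights (summing to 1.0) reflecting one organization's priorities.
WEIGHTS = {
    "volume_and_complexity": 0.25,
    "streaming_support": 0.20,
    "source_coverage": 0.20,
    "performance": 0.15,
    "ease_of_use": 0.10,
    "cost": 0.10,
}

# Hypothetical 1-5 ratings for two placeholder tools; not real benchmarks.
CANDIDATES = {
    "Tool A": {"volume_and_complexity": 5, "streaming_support": 3, "source_coverage": 4,
               "performance": 4, "ease_of_use": 2, "cost": 2},
    "Tool B": {"volume_and_complexity": 3, "streaming_support": 5, "source_coverage": 3,
               "performance": 3, "ease_of_use": 4, "cost": 4},
}


def weighted_score(ratings, weights):
    """Sum of rating times weight across all criteria."""
    return sum(ratings[c] * w for c, w in weights.items())


ranking = sorted(CANDIDATES, key=lambda t: weighted_score(CANDIDATES[t], WEIGHTS), reverse=True)
for tool in ranking:
    print(f"{tool}: {weighted_score(CANDIDATES[tool], WEIGHTS):.2f}")
```

Here the two placeholders score 3.65 and 3.60, so small changes to the weights could reorder them; it is worth checking how sensitive a ranking is before committing to a tool.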

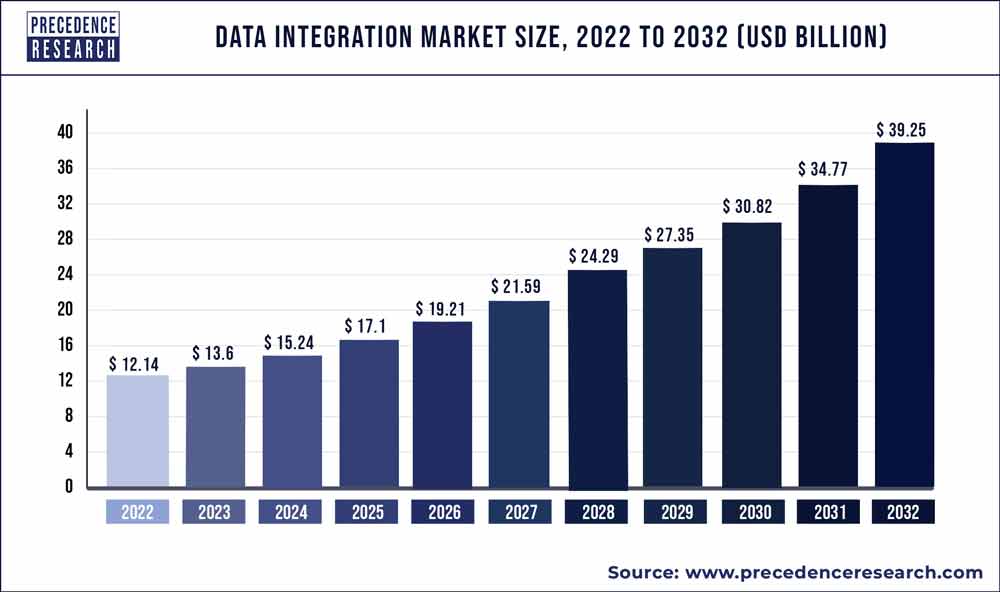

With that in mind, many widely used data integration tools are available to organizations today. These tools are driving significant growth in the data integration market, which is expected to grow at a compound annual growth rate of 12.5% from 2023 to 2032.
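To put the 12.5% figure in perspective: assuming the rate compounds annually over the nine years from 2023 to 2032, the implied overall growth multiple of the market value $V$ is:

```latex
\frac{V_{2032}}{V_{2023}} = (1 + 0.125)^{2032 - 2023} = 1.125^{9} \approx 2.89
```

In other words, the market would end the period at roughly 2.9 times its 2023 size.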

The top data integration tools today include:

- Apache NiFi, an open-source solution

- AWS Glue, Amazon’s managed integration service, part of the Amazon Web Services solution

- Denodo, a data virtualization platform that integrates data from multiple sources

- IBM InfoSphere Information Server, IBM’s comprehensive data integration solution for large-scale projects

- Informatica PowerCenter, a robust data integration platform that offers useful features such as data profiling and metadata management

- Microsoft SQL Server Integration Services, Microsoft’s solution that integrates data from multiple sources into SQL Server databases

- Pentaho Data Integration, an open-source data integration tool that also offers data warehousing and reporting

- Qlik Replicate, a data integration and replication tool that works across multiple platforms

- SAP Data Services, a popular data integration tool that supports both batch processing and real-time integration

- SnapLogic, a cloud-based integration platform that works across cloud and on-premises data sources

- Talend, an open-source data integration platform that supports both on-premises and cloud deployments

DataBuck Ensures Clean Data for Effective Data Integration

Whichever data integration tool you choose, you can improve its effectiveness by ensuring a stream of reliably high-quality data. This is best achieved by monitoring all ingested and internally created data with DataBuck from FirstEigen. DataBuck is an autonomous data quality monitoring solution that employs artificial intelligence and machine learning to monitor and clean integrated data in real time. The result? You get the consistent data quality your organization needs for effective data integration.

Contact FirstEigen today to learn more about data integration quality.
