Seth Rao

CEO at FirstEigen

Top 5 Challenges of Data Validation in Databricks and How to Overcome Them

Databricks data validation is a critical step in the data analysis process, especially considering the growing reliance on big data and AI. While Databricks offers a powerful platform for data processing and analytics, flawed data can lead to inaccurate results and misleading conclusions. Here’s how to ensure your Databricks data is trustworthy and ready for analysis:

Understanding the Challenges:

Data validation in Databricks comes with its own set of hurdles. Here are some of the most common ones:

- Data Volume and Complexity: Big data sets can be overwhelming to validate manually. Complex data structures with nested elements further complicate the process. Studies show that data quality issues cost businesses in the US an estimated $3.1 trillion annually [source: IBM study on data quality].

- Data Source Variability: Data can come from various sources, each with its own format and potential inconsistencies. A 2021 survey by Experian revealed that 88% of businesses struggle with data quality issues stemming from inconsistent data sources.

- Evolving Schemas: Data schemas, the blueprints for organizing data, can change over time. Keeping validation rules current with schema updates can be challenging.

- Stateful Data Validation: Traditional validation approaches often require tracking data state, which can become complex in large-scale data pipelines.

Overcoming the Obstacles:

Databricks offers built-in features and allows integration with external tools to tackle these challenges:

- Leverage Delta Lake: Databricks’ Delta Lake provides schema enforcement and ACID transactions, ensuring data consistency and reliability. Studies by Databricks customers show that Delta Lake can reduce data processing times by up to 10x.

- Embrace Parallel Processing: Databricks’ parallel processing capabilities enable efficient validation across large datasets. This can significantly reduce validation times compared to traditional methods.

- Integrate External Tools: Consider tools like DataBuck that seamlessly integrate with Databricks and offer functionalities like automated checks, AI-powered anomaly detection, and centralized rule management.

By implementing these strategies, you can significantly improve data quality and ensure your Databricks analysis is built on a solid foundation of trust.

Why Data Validation in Databricks Matters: Avoiding the Pitfalls of Unreliable Data

In today’s data-driven world, the quality of your information is paramount. Databricks, a powerful platform for data processing and analytics, offers immense potential. But even with Databricks, if your data is inaccurate or inconsistent, your entire analysis can be thrown off course. Data validation acts as a safety net, ensuring the information you’re working with is reliable and trustworthy.

Here’s why data validation in Databricks is crucial:

- Accurate Results

- Improved Decision-Making

- Saved Time and Resources

- Enhanced Collaboration

- Regulatory Compliance

By taking data validation seriously in Databricks, you can avoid the pitfalls of unreliable data and unlock the true value of your information. Clean, accurate data is the foundation for sound decision-making, improved efficiency, and overall success in today’s data-driven world.

Stop Struggling with Data Validation in Databricks: Let DataBuck Be Your Solution

Are you spending too much time cleaning and validating data in Databricks? Frustrated by errors and inconsistencies throwing off your analysis? DataBuck can help!

DataBuck is a powerful tool designed specifically to address data validation challenges in Databricks. Here’s how DataBuck can transform your experience:

- Effortless Integration: DataBuck integrates seamlessly with your existing Databricks workflow. No need to learn new systems or disrupt your current processes.

- Automated Validation: Say goodbye to manual data checks! DataBuck automates the validation process, saving you significant time and effort.

- AI-Powered Insights: Leveraging artificial intelligence, DataBuck goes beyond basic checks to identify hidden patterns and potential data quality issues you might miss.

- Improved Data Quality: DataBuck helps ensure the accuracy and consistency of your data, leading to more reliable results and better decision-making.

- Faster Time to Insights: With automated validation and improved data quality, DataBuck helps you get to meaningful insights quicker.

Achieve 90% Faster Data Validation in Databricks With AI-driven Data Trust Score

Validation Challenges in Databricks and Strategies to Overcome Them

Databricks is one of the more capable data management platforms available. Nevertheless, no platform can anticipate the requirements of every data processing scenario, and even experienced users will hit a snag now and again. Here are five of the common challenges.

1. Data Volume and Complexity

The advances in cloud computing, data modeling methods, and compression algorithms have fueled a data surge in business applications over the last decade. As datasets get larger, they morph into more complex and heterogeneous entities. Every terabyte processed means millions of data points, each with potential inconsistencies or errors.

Furthermore, large datasets often contain multiple structure types, ranging from simple flat files to intricate, multi-layered formats like JSON or Parquet. Validating such datasets not only demands significant computational power but also precise methodologies that can navigate the often-convoluted pathways of modern data architectures.

Solution: Combat this using Databricks’ inherent parallel processing strengths. This involves logically dividing the dataset into smaller segments and validating them concurrently across multiple nodes. Simultaneously, create an expansive library of user-defined functions (UDFs) tailored to diverse data structures.

Keeping these functions updated ensures robust and agile validation, ready to adapt to emerging data complexities. Collaborative team reviews of these UDFs, combined with the adoption of advanced data parsing techniques, guarantee thorough validation regardless of volume or structural intricacy.

2. Data Source Variability

With business growth comes a proliferation of data sources. Modern companies rely on combinations of sources, ranging from relational databases to IoT feeds and real-time data streams. This diversity of data sources comes with validation challenges. Each data source introduces its own syntax, formatting, and potential anomalies, leading to inaccuracies, redundancies, and the dreaded “garbage in, garbage out” phenomenon.

Solution: Within the Databricks environment, build a regimented ingestion framework. This cohesive pipeline should act as the gatekeeper, scrutinizing, cleansing, and transforming every bit of data funneled through it. Establish preprocessing routines that standardize data formats, nullify inconsistencies, and enhance overall data integrity.

3. Evolving Schemas

In modern data processing, schemas are much less rigid than their predecessors serving traditional backend relational databases. While this flexibility ensures alignment with contemporary requirements, it complicates validation. A validation rule effective today might be obsolete tomorrow due to subtle schema alterations. This fluid landscape necessitates a proactive, forward-looking validation approach.

Solution: Anchor your strategy in a twofold schema management system. One edge is a comprehensive schema registry, meticulously documenting every schema iteration. The other is an agile version control mechanism for categorizing changes.

With this two-pronged approach, validation teams can anticipate schema changes and recalibrate their rules accordingly. Regular audits, combined with automated schema change alerts, will ensure that the validation logic remains consistent and synchronous with the evolving data structures.

4. Stateful Data Validation

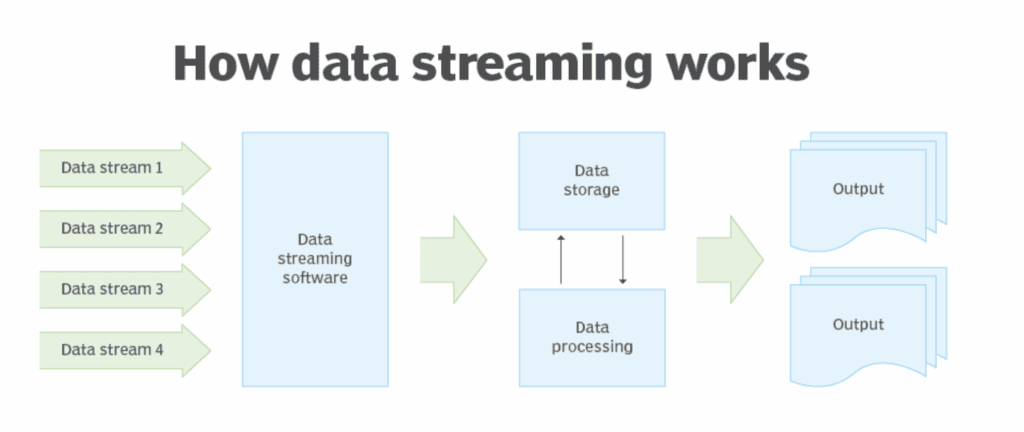

With the rise of real-time analytics, data streaming has become an essential data management capability. Unlike static databases, streaming data updates continually, necessitating state-aware validation. In data streaming environments, ensuring an object’s consistent representation across data bursts becomes difficult.

Solution: Leverage the features of Databricks’ Delta Lake. Lauded for its ACID compliance, Delta Lake excels in providing data consistency in variable streaming feeds. By integrating Delta Lake, you can maintain a consistent data snapshot, even when updates spike in frequency. This consistent data image becomes your foundation for stateful validation. Further, you can implement features like:

- Checkpoints

- Real-time tracking algorithms

- Temporal data markers

With these measures, you can guarantee your validations remain accurate and reliable, regardless of the data’s transient nature.

5. Tool and Framework Limitations

Applications like Databricks rarely operate in isolation. Businesses that process large data volumes will also use several other specialized tools and frameworks, each excelling in specific niches. At first glance, introducing an external tool into the Databricks environment may seem like integrating a cog into a well-oiled machine. However, potential compatibility issues, overlapping functionalities, and the constant need for updates can strain ongoing operations, leading to efficiency drops and occasional malfunctions. Finding the perfect balance between leveraging Databricks’ native capabilities and supplementing with features from other platforms can become challenging.

Solution: To integrate external tools with Databricks, institute a centralized validation tool repository within the platform. This repository should house a collection of pre-vetted, compatible, and regularly updated tools and libraries. Beyond simple storage, this hub should facilitate:

- Collaborative tool evaluations

- Performance benchmarking

- Feedback loops

With such a repository, teams can confidently reach for the right tool, assured of its compatibility within the Databricks ecosystem.

Data validation in Azure Databricks

Building on the challenges and solutions discussed earlier, let’s specifically explore data validation within the context of Azure Databricks. As a managed cloud service offering of the Databricks platform, Azure Databricks inherits the core functionalities and integrates seamlessly with Azure cloud services. This section dives into how Azure Databricks’ features can be leveraged for effective data validation.

Utilizing Azure Databricks’ Strengths:

Delta Lake Integration: Azure Databricks integrates with Delta Lake, enforcing data schema and offering data consistency during writes. This reduces inconsistencies in your pipelines.

Parallel Processing: Azure Databricks leverages Apache Spark’s parallel processing, allowing you to efficiently validate large datasets across multiple nodes, significantly reducing processing time.

Azure Databricks Notebooks: These notebooks provide a flexible environment for defining custom validation logic using Spark SQL functions and Python libraries.

Security and Monitoring:

- Security: Leverage Azure Databricks’ role-based access control (RBAC) to restrict access to sensitive data during validation.

- Monitoring: Set up monitoring tools to track validation failures and receive alerts for data quality issues. This allows for prompt intervention.

By effectively utilizing these capabilities, Azure Databricks empowers you to build a robust data validation framework, ensuring clean and reliable data for insightful analysis. The next section will explore how to build such a framework within Azure Databricks.

Data Validation vs Data Quality in Databricks

Data validation and data quality in Databricks are closely related, but they’re not the same thing. Here’s a quick breakdown:

- Data Validation: This is the process of checking your data against specific rules to ensure it meets certain criteria. Think of it like an inspector checking if a product meets safety standards before it goes on sale. In Databricks, you might validate data to check for missing values, ensure it’s in the correct format (e.g., dates), or fall within a specific range.

- Data Quality: This is the overall health of your data. It considers factors like accuracy, completeness, consistency, and timeliness. Data quality is like the overall condition of the product – is it safe, reliable, and meets customer needs? Good data validation helps ensure good data quality in Databricks.

By implementing effective data validation practices, you can significantly improve your data quality, leading to more reliable analysis and better decision-making.

The next section dives into advanced techniques you can use to take your data validation in Databricks to the next level.

Difference Between Data Validation vs Data Quality in Databricks

| Feature | Data Validation | Data Quality |

| Focus | Checking data against specific rules | Overall health of your data |

| Analogy | Inspector checking product safety | Overall product condition |

| Examples in Databricks | Checking for missing values, ensuring correct format (dates), verifying value ranges | Accuracy, completeness, consistency, timeliness |

| Goal | Ensure data meets specific criteria | Improve data reliability and usefulness |

Leverage AI/ML for Powerful Data Validation

While traditional techniques like checking for missing values and ensuring proper formatting are crucial, Databricks offers advanced functionalities for even more powerful data validation:

Machine Learning for Anomaly Detection

Machine learning (ML) algorithms can be trained on clean data to identify patterns and deviations in new data sets. This allows you to detect anomalies – unexpected values or data points that fall outside the normal range – that might slip through basic validation checks. For instance, an ML model trained on historical sales data can identify sudden spikes or dips in sales figures, potentially indicating errors or fraudulent activity.

Integrating External Data Sources

Data validation can be strengthened by referencing external sources of reliable information. Imagine validating customer addresses – you can integrate with a postal service database to confirm address accuracy. This adds an extra layer of confidence to your data quality checks.

Automating Data Profiling

Data profiling involves summarizing key characteristics of your data, such as data types, value distributions, and presence of null values. Automating this process using Databricks tools helps you gain a quick understanding of your data’s overall health and identify potential issues early on.

Unstructured Data Quality Validation Techniques

While traditional methods work well for structured data (data organized in tables with defined columns), unstructured data (text, images, etc.) requires different approaches. Techniques like sentiment analysis for text data or anomaly detection for images can help ensure the quality of these less-structured formats.

The next sections will delve deeper into each of these advanced techniques, providing practical guidance on how to implement them within your Databricks workflow.

Building a Comprehensive Data Validation Framework in Databricks

Having explored the importance of data validation and various techniques, let’s delve into building a robust data validation framework within Databricks. This framework will ensure your data is consistently checked and meets your quality standards.

Defining Validation Rules

Defining clear and well-defined validation rules is the cornerstone of your framework. These rules specify the criteria your data needs to meet. Here’s how to approach this:

- Identify Data Quality Requirements: Start by understanding the specific needs of your data analysis. What kind of data accuracy and consistency is crucial for your use case?

- Translate Requirements to Rules: Once you understand the requirements, translate them into actionable rules. For example, a rule might state that no customer ID can be missing (completeness) or that a specific date field must be in YYYY-MM-DD format.

- Document Your Rules: Clearly document your validation rules for easy reference and maintenance. This ensures everyone working with the data is aware of the quality expectations.

Implementing Validation Logic in Databricks Notebooks

Databricks notebooks offer a flexible environment for defining your validation logic. You can leverage Spark SQL functions and Python libraries to write code that checks your data against your defined rules. For instance, you can use Spark SQL functions to check for missing values or perform basic data type validations.

Scheduling Validation Checks

Manual data validation isn’t sustainable. Schedule regular validation checks as part of your data pipelines. Databricks allows you to set up automated jobs that run your validation logic periodically, ensuring your data quality is continuously monitored.

Reporting on Data Quality Metrics

Data validation generates valuable information about your data health. Capture and report on key data quality metrics like the percentage of missing values, data type inconsistencies, or anomalies detected. These reports provide insights into areas that need improvement and help track the overall effectiveness of your validation framework.

Data Quality Validation Rules and Best Practices

Developing a set of best practices for defining and managing your data quality validation rules is crucial. Here are some key points to consider:

- Focus on Critical Data: Prioritize validation rules for data elements that significantly impact your analysis.

- Maintainable Rules: Keep your rules clear, concise, and easy to understand for future maintenance.

- Balance Efficiency and Comprehensiveness: Strive for a balance between thorough validation and efficient execution time within your pipelines.

- Regular Review and Updates: Regularly review and update your validation rules to reflect changes in data formats or quality requirements.

By following these steps and best practices, you can build a robust data validation framework in Databricks, ensuring your data analysis is built on a foundation of trust and accuracy. The next section will discuss some additional considerations for handling data validation errors and maintaining a high level of data integrity.

DataBuck: Your Powerful Ally in Automated Databricks Validation

While we’ve covered essential data validation techniques, manual processes can be time-consuming and error-prone. This is where DataBuck comes in – a powerful tool designed to automate and simplify data validation within your Databricks environment.

1. Streamlined Workflow Integration

DataBuck integrates seamlessly with your existing Databricks workflows. No need to learn new systems or disrupt your current data pipelines. Simply add DataBuck’s pre-built validation checks to your notebooks or jobs, and it takes care of the rest. This reduces setup time and ensures your validation process is smoothly integrated with your overall data analysis tasks. FirstEigen’s Databricks services can help with implementing DataBuck into your workflow.

2. AI/ML-Powered Checks

DataBuck goes beyond basic validation rules. It leverages artificial intelligence and machine learning (AI/ML) to identify hidden patterns and potential data quality issues that might escape traditional checks. For instance, DataBuck’s AI models can learn from your historical data to detect anomalies or suspicious data points that deviate from expected patterns. This advanced layer of validation significantly improves the overall accuracy and reliability of your data.

3. Autonomous Validation and Alerting

DataBuck automates the entire validation process, freeing you from manual data checks. Schedule automated validation runs as part of your Databricks jobs, and DataBuck will handle the execution. In addition, DataBuck can be configured to send automated alerts when validation errors or quality issues are detected. This allows you to take prompt action and address data quality problems before they impact your analysis.

4. Scalability and Performance

As your data volume grows, DataBuck scales effortlessly to handle the demands of big data validation. Its architecture ensures efficient performance even with large datasets, minimizing the impact on your Databricks cluster resources. This allows you to validate your data efficiently without compromising the speed of your data pipelines.

By leveraging DataBuck’s automation, AI/ML capabilities, and scalability, you can significantly improve the efficiency and effectiveness of your data validation efforts in Databricks. This frees up valuable time and resources for data scientists and analysts to focus on more strategic tasks, ultimately leading to better data-driven decision making.

Validate 1,000+ Tables Effortlessly with DataBuck's AI-Driven Tools

Elevate Your Organization’s Data Quality with DataBuck by FirstEigen

DataBuck enables autonomous data quality validation, catching 100% of systems risks and minimizing the need for manual intervention. With 1000s of validation checks powered by AI/ML, DataBuck allows businesses to validate entire databases and schemas in minutes rather than hours or days.

To learn more about DataBuck and schedule a demo, contact FirstEigen today.

Check out these articles on Data Trustability, Observability & Data Quality Management-

- Databricks Integration

- Best Practices for Data Quality Management

- Data Catalog Tools for Enterprises

- Data Quality Management Using Databricks Validation

- Data Quality Checks Best Practices

- Data Quality Monitoring Techniques

- Service Level Agreement Metrics

- Data Integrity Migration

- Complex Data Analytics

- Data Validation Automation

- Difference Between Monitoring and Observability

- Enterprise Data Management Services

- Azure Data Quality Service

- Data Quality Machine Learning

- Data Integrity Issues

- IoT Analytics Integration

Did You Find This Helpful?

Thanks you for response.

FAQs

For real-time data streams, consider using lightweight validation checks that can be executed quickly without significantly impacting processing speed. Stream processing frameworks like Apache Spark Streaming offer libraries for real-time data validation.

- Define data quality thresholds: Determine acceptable error rates for different data elements.

- Implement data cleansing routines: Automate processes to fix or remove erroneous data based on predefined rules.

- Alert and investigate: Set up alerts for critical validation errors and investigate root causes to prevent future occurrences.

- Track cost savings: Quantify cost reductions from improved data quality, such as avoiding rework due to errors.

- Measure efficiency gains: Track time saved by data scientists due to fewer data quality issues.

- Evaluate improved decision-making: Consider the value of more accurate insights based on reliable data.

Yes! DataBuck provides detailed reports on validation failures, including the location and nature of errors. This helps pinpoint the root cause of data quality issues, allowing for faster debugging and remediation.

Several data validation tools integrate with Databricks, offering functionalities like automated checks, data profiling, and anomaly detection. Some popular options include tools like DataBuck, Collibra, and Informatica.

Discover How Fortune 500 Companies Use DataBuck to Cut Data Validation Costs by 50%

Recent Posts

Get Started!

Would you like to tell us why?