Autonomous Data Quality Validation with DataBuck

Eliminate unexpected data issues

Why Monitor Data Quality?

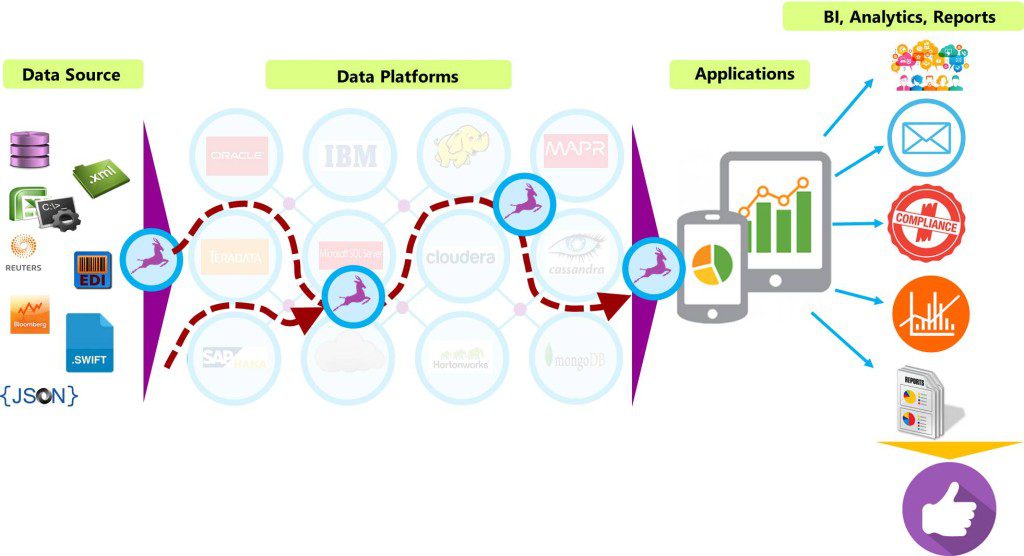

Errors creep into data at every step as it makes its way to the business and analytics teams for use and insight. Companies are seeing an increase in data volume, data complexity, the number of data sources, and the number of platforms (Lake, Cloud, Cloud Warehouses, Hadoop).

Challenges

Traditional data validation solutions:

- Were built for Data Stewards, not for Data Engineers, Data Governance, or Data Management teams.

- Were built for writing rules for each table one by one, not for automation or scale.

- Are costly to scale and difficult to maintain.

Our approach to Data Quality

Autonomous Data Quality - powered by ML

DataBuck is an automated data validation and monitoring tool, powered by Machine Learning.

- Automatically recommends the essential baseline set of rules to validate any dataset.

- Lets you write additional custom rules on top of the essential recommendations.

- Extremely secure: moves the rules to where the data is rather than moving the data.

- Lets business users and Data Stewards edit data validation checks in a few clicks from the UI.
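To make the idea of recommended baseline rules concrete, here is a minimal Python sketch. It is not DataBuck's algorithm or API: the function `recommend_baseline_rules`, the rule names, and the sample records are all hypothetical, and the logic is a simple profiling heuristic rather than Machine Learning. It only illustrates how completeness, uniqueness, and range checks could be proposed from a sample of the data.

```python
def recommend_baseline_rules(rows, null_tolerance=0.0):
    """Profile a list of dict records and propose simple per-column rules.

    Hypothetical illustration only; not how DataBuck derives its rules.
    """
    rules = []
    columns = sorted({key for row in rows for key in row})
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        null_rate = 1 - len(present) / len(values)

        # No more nulls than tolerated in the sample: propose a completeness rule.
        if null_rate <= null_tolerance:
            rules.append((col, "not_null"))

        # Every non-null value is distinct: propose a uniqueness rule.
        if present and len(set(present)) == len(present):
            rules.append((col, "unique"))

        # All non-null values are numeric: propose a range rule from observed bounds.
        if present and all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in present
        ):
            rules.append((col, ("range", min(present), max(present))))
    return rules


# Made-up sample records for illustration.
sample = [
    {"id": 1, "country": "US", "amount": 10.0},
    {"id": 2, "country": "US", "amount": 25.5},
    {"id": 3, "country": None, "amount": 7.25},
]

rules = recommend_baseline_rules(sample)
for column, rule in rules:
    print(column, rule)
```

Rules proposed this way are only a starting point. A small sample can suggest a check that does not hold in general, such as uniqueness on an amount column, which is why being able to review and edit the recommended checks matters.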

Benefits:

Accomplish 2 man-years' worth of validation in 8 hours

DataBuck has delivered these results for its customers:

A top-3 US bank with over $1.5 trillion in assets reduced operational and regulatory reporting risk by leveraging DataBuck to monitor 15,000+ data assets.

A top-3 global networking equipment provider reduced financial reporting risk by leveraging DataBuck to monitor 800+ data assets in its financial data warehouse and to reconcile financial information.

A top-3 bank in Africa reduced financial crime risk by leveraging DataBuck to monitor client data spanning over 300 data assets in its Data Lake.

A top-3 telemedicine and healthcare company reduced data risk and transformed its data pipeline by leveraging DataBuck to monitor, in real time, eligibility files received from 250+ hospitals and comprising millions of records.

A leading media streaming company reduced revenue risk by leveraging DataBuck to monitor customer, prospect, and account data in near real time.

A multinational bank operating in 10 different countries reduced its regulatory compliance risk by configuring DataBuck to improve the completeness and accuracy of KYC data.

A life insurance and annuities company cut computation time by more than 67% while ensuring referential integrity, completeness of data movement, and the logic of financial numbers with the help of DataBuck.

A pharma company was receiving over 70 different sources of data from several healthcare providers. DataBuck’s out-of-the-box, pharma-specific capabilities solved their top-10 needs for pharmaceutical data validation.

Do your business partners question your credibility in providing error-free data?

See how DataBuck Leverages AI/ML for Superior Data Validation

What Data Sources Can It Work With?

DataBuck can accept data from all major data sources, including Hadoop, Cloudera, Hortonworks, MapR, HBase, Hive, MongoDB, Cassandra, DataStax, HP Vertica, Teradata, Oracle, MySQL, MS SQL, SAP, Amazon AWS, MS Azure, and more.