Posts by Angsuman Dutta

Managing Tariff Implications Through Data Integrity in Global Supply Chains

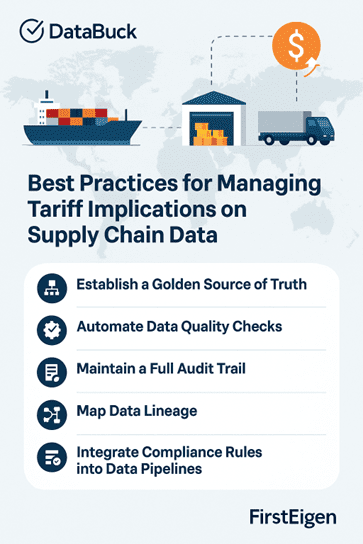

In today’s global marketplace, supply chains span continents. From consumer electronics to industrial machinery, companies rely on global sourcing and distribution to stay competitive. But with this reach comes complexity—especially when it comes to tariffs.

Agentic Data Trust: Next Frontier for Data Management

As data grows exponentially, ensuring accuracy, security, and compliance is increasingly challenging. Traditional rule-based data quality checks—whether downstream (reactive) or upstream (proactive)—still produce substantial manual overhead for monitoring and resolving alerts. Agentic Data Trust addresses these issues by leveraging intelligent agents that minimize human intervention through automated oversight, rule updates, and data corrections. The result…

5 Emerging Data Trust Trends to Watch in 2025

As organizations accelerate their data-driven initiatives, data quality is evolving from a manual, back-office function to a core business priority. By 2025, we’ll see a new generation of data quality capabilities seamlessly integrated into analytics pipelines, AI models, and decision-making frameworks. Here are five emerging trends reshaping the future of data quality: 1. Data Trust…

Challenges With Data Observability Platforms and How to Overcome Them

Core Differences Between Data Observability Platforms and DataBuck

Many organizations that initially embraced data observability platforms are now realizing the limitations of these solutions, especially as they encounter operational challenges. Although data observability platforms started strong (tracking data freshness, schema changes, and volume fluctuations), their expansion into deeper profiling has created significant drawbacks. Below, we explore the…

Ditch ‘Spray and Pray’: Build Data Trust With DataBuck for Accurate Executive Reporting

In the world of modern data management, many organizations have adopted data observability solutions to improve their data quality. Initially, these solutions had a narrow focus on key areas such as detecting data freshness, schema changes, and volume fluctuations. This worked well for the early stages of data quality management, giving teams visibility into the…

Data Errors Are Costing Financial Services Millions and How Automation Can Save the Day?

Data quality issues continue to plague financial services organizations, resulting in costly fines, operational inefficiencies, and damage to reputations. Even industry leaders like Charles Schwab and Citibank have been severely impacted by poor data management, revealing the urgent need for more effective data quality processes across the sector. Key Examples of Data Quality Failures: These…

Data Integrity Issues in Banking: Major Compliance Challenges and Solutions

What Are Data Integrity Issues in Banking?

Banks face a high cost when data errors slip through due to inadequate data control. Examples include fines for TD Bank, Wells Fargo, and Citigroup due to failures in anti-money laundering controls and data management. The main reasons for these data issues include non-scalable data processes, unrealistic expectations…

Empowering Data Excellence: the Role of Cloudera Data Lake, Features & Benefits.

In today’s data-driven world, organizations are collecting more information than ever before. But the true value of data lies not just in its quantity, but in its quality and trustworthiness. This is especially true for vast data repositories like Cloudera Data Lake. This guide explores Cloudera Data Lake, a platform designed to store and manage…

Data Trust Scores and Circuit Breakers: Ensuring Robust Data Pipeline Integrity

Data Pipeline Circuit Breakers: Ensuring Data Trust With Unity Catalog

In the fast-paced world of data-driven decision-making, the integrity and reliability of your data are paramount. Data Pipeline Circuit Breakers play a pivotal role in ensuring that data flows smoothly from source to destination, facilitating accurate analytics and informed decision-making. However, even the most robust…

Benefits of Complementing Informatica with a Data Quality Add-On

How important is data quality to your organization? Accurate, reliable data is imperative for smooth operations and informed business decisions. If your business uses Informatica for data integration, you may find its built-in data quality management tools lacking in both effectiveness and ease of use. To obtain more accurate data with less technical effort, consider…
