Seth Rao

CEO at FirstEigen

5 Essential ETL Tools to Solve Big Data Challenges, Automate Processes, & Improve Data Quality Monitoring

Getting your ducks in a row is easier than managing the sprawling datasets that organizations have to juggle daily. Uncovering insights into business performance, determining the right growth strategy, and understanding where the next opportunity for innovation resides all depend on having quality data available from a variety of sources. Extract, transform, and load (ETL) tools are an essential cog in the wheel of modern data pipelines.

ETL tools are available in a range of different flavors and implementations, each with its own benefits and drawbacks. Most organizations today depend on a variety of business applications to manage operations including HR, CRM, ERP, and discipline-specific systems. Bringing these datasets together to form a holistic view of the business without ETL is an impossible task. Throw in the cloud era and traditional ETL approaches are no longer up to the challenge either.

To help you navigate the muddy waters that big data and decentralized operations bring to modern organizations, let’s look at the ETL tools and approaches you cannot do without in 2022.

Key Takeaways

- ETL tools have come a long way since the days of manually running custom queries and scripts for each application

- With modern integrations to third-party visualization and validation systems, today’s ETL tools enable large-scale processing of decentralized datasets

- Enterprise and open-source ETL tools make it possible for any organization to ingest, validate, and analyze its data, with automated data quality monitoring built into the process

Why Are New ETL Tools and Approaches Necessary?

The move from on-premise to cloud operations has put traditional ETL tools and approaches under immense pressure. Adapting ETL rules by hand to accommodate new data sources, formats, and relationships is painstaking work, and outdated mapping techniques cannot keep up in today’s hyper-connected world.

Some of the major concerns with traditional ETL tools are that they:

- Rely on custom-coded programs or scripts that become specialized within an organization

- Require lengthy reengineering whenever the data model changes or a new application enters the technology stack

- Depend on a rigid process where data stewards need to sign off on every new integration and its ETL rules

- Cannot support continuous data streaming and transfers between systems automatically

How to Evaluate ETL Tools

Newer solutions can integrate with an organization’s data pipeline even in decentralized environments. When evaluating ETL tools, companies need to consider:

- The budget – Smaller organizations may have to skimp on features to stay within budget

- The use cases – Establish exactly how the process will look before analyzing the features of the tool

- Interoperability – Some ETL tools will only work with certain data sources and repositories while others are vendor-agnostic

- The UX/UI – No-code or low-code systems are easier to deploy, maintain, and adapt as the data pipeline expands

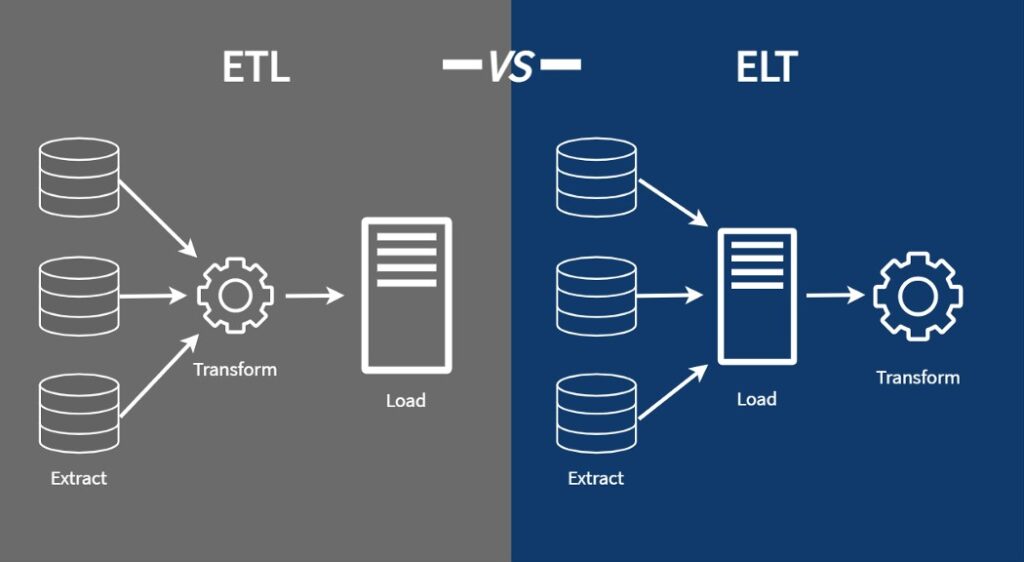

Additionally, there is a shift from ETL to ELT (extract, load, transform) where the transformation process takes place in the target system after ingestion. This approach also has dedicated solutions that may be a better fit for the organization’s operational structure.
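The difference between the two orderings can be shown with a minimal sketch. It assumes an in-memory SQLite database as a stand-in for the target system, and the table names, sample rows, and function names are hypothetical, chosen only for illustration. In the ETL path the cleanup happens in Python before loading; in the ELT path the raw rows are loaded first and the target's own SQL engine does the cleanup.

```python
import sqlite3

# Hypothetical raw rows, as they might arrive from a source system.
RAW_ROWS = [
    ("  Alice ", "1200.50"),
    ("BOB", "300"),
    ("carol", "75.25"),
]


def run_etl(conn):
    """ETL: clean the rows in Python first, then load the finished result."""
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
    cleaned = [(name.strip().lower(), float(amount)) for name, amount in RAW_ROWS]
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", cleaned)
    return conn.execute(
        "SELECT customer, amount FROM sales_etl ORDER BY customer"
    ).fetchall()


def run_elt(conn):
    """ELT: load the raw rows untouched, then transform them with SQL in the target."""
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        """
        CREATE TABLE sales_elt AS
        SELECT lower(trim(customer)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM sales_raw
        """
    )
    return conn.execute(
        "SELECT customer, amount FROM sales_elt ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    print(run_etl(connection))
    print(run_elt(connection))
```

Both paths end with the same cleaned table. What changes is where the transformation work runs, which is why ELT leans on the processing power of the target system.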

5 ETL Tools to Consider in 2022

Choosing the best ETL or ELT tool will require careful consideration. Below, we look at some of the best solutions available today.

1. IBM DataStage

Built for performance, IBM DataStage uses a client/server design with cloud and on-premise deployments available. Users can create and execute tasks against a central repository with data integrations for multiple sources. You can try IBM DataStage with a free trial before deciding which plan you want to take.

2. Hevo

Hevo is a fully automated, no-code data pipeline platform that supports 150+ ready-to-use integrations across databases, SaaS applications, cloud storage, SDKs, and streaming services. Try Hevo’s 14-day free trial today and get your fully managed data pipelines up and running in just a few minutes.

3. Informatica PowerCenter

Although the mapping designer’s UI looks simple, Informatica PowerCenter is powerful enough to support an entire enterprise’s ETL processes. It can parse many data formats including JSON, PDF, and XML as well as machine (IoT) data. It’s easy to use and can scale with your business needs as you add more data pipelines, without compromising performance. Similar to IBM DataStage, there is a free trial and paid plans available.

4. SAS Data Management

Designed to connect with all data across the data stack including data lakes, cloud, and legacy systems, SAS Data Management empowers non-IT stakeholders to build their own ETL workflows. It has integrations available for third-party modeling tools to help users analyze data using a holistic view of the organization’s business processes. Pricing is only available on request but you can try it before buying.

5. Talend Open Studio

Supported by an open-source community, Talend Open Studio has a drag-and-drop UI for building jobs that pull data from sources like Excel, Oracle, Dropbox, MS Dynamics, and more. Because it’s free and comes with built-in connectors to multiple environments, it can help organizations quickly set up their data pipelines and start analyzing information from relational databases, SaaS platforms, and other applications.

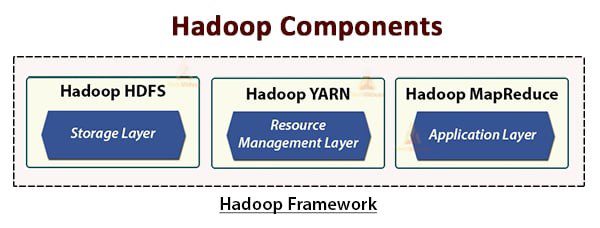

6. Hadoop

For more experienced and technically minded data professionals, Hadoop provides a framework for processing large datasets across a cluster of computers. Its modules provide a range of features, including job scheduling, parallel processing, and a distributed file system, which together can support extracting and processing data from many kinds of applications. Hadoop is free, open-source software from the Apache Software Foundation, and its modules include MapReduce for parallel processing, alongside a variety of related Apache projects.
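To give a feel for the parallel processing model behind MapReduce, here is a minimal single-machine sketch of a word count in plain Python. It does not use Hadoop's API; the function names are hypothetical and the three phases simply run one after another in a single process, whereas a real cluster would spread the map and reduce work across many nodes.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Group all emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Collapse each key's list of values into a single total."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    """Run map, shuffle, and reduce in sequence over all input splits."""
    mapped = []
    for document in documents:  # on a cluster, each split would be mapped in parallel
        mapped.extend(map_phase(document))
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    print(word_count(["big data", "Big pipelines", "data data"]))
```

Because each input split is mapped independently and each key is reduced independently, the same logic can be distributed without the pieces needing to coordinate beyond the shuffle step.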

Automated Data Quality Monitoring With Your ETL Tools

Apart from ETL tools, you’ll also need an automated data quality monitoring (DQM) solution like DataBuck and a data rule discovery system to ensure you can maintain the required data quality across your environment. By combining these tools with your ETL solution and integrating them with your data pipelines, you can improve the trust in your data while empowering your resources to make decisions confidently.

DataBuck automates your data quality validation tasks, speeding up the time to market when adding new data pipelines to your BI and analytical toolsets. SMEs can understand the data quality rules while data stewards can understand where their ETL processes may be failing. With cognitive data quality validation, you can quickly improve your analytical models using AI and ML automation from DataBuck.
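As a rough illustration of what rule-based data quality validation involves, the sketch below runs three simple checks (completeness, uniqueness, and value range) over a batch of records before they would be loaded. This is not DataBuck's API or method; the function names, field names, and thresholds are all assumptions made for the example, and an automated tool would discover and maintain such rules rather than have them hard-coded.

```python
def check_completeness(rows, required_fields):
    """Return indexes of rows where a required field is missing or empty."""
    return [
        index
        for index, row in enumerate(rows)
        if any(row.get(field) in (None, "") for field in required_fields)
    ]


def check_uniqueness(rows, key):
    """Return key values that appear more than once."""
    seen, duplicates = set(), []
    for row in rows:
        value = row.get(key)
        if value in seen and value not in duplicates:
            duplicates.append(value)
        seen.add(value)
    return duplicates


def check_range(rows, field, minimum, maximum):
    """Return indexes of rows whose numeric field falls outside [minimum, maximum]."""
    out_of_range = []
    for index, row in enumerate(rows):
        value = row.get(field)
        if isinstance(value, (int, float)) and not (minimum <= value <= maximum):
            out_of_range.append(index)
    return out_of_range


def validate(rows):
    """Run all checks and collect the findings in one report (hypothetical rules)."""
    return {
        "incomplete_rows": check_completeness(rows, ["id", "email"]),
        "duplicate_ids": check_uniqueness(rows, "id"),
        "out_of_range_rows": check_range(rows, "age", 0, 120),
    }


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@example.com", "age": 34},
        {"id": 2, "email": "", "age": 41},
        {"id": 2, "email": "c@example.com", "age": 230},
    ]
    print(validate(sample))
```

A pipeline could run a report like this after the transform step and hold back the load when any list is non-empty.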

If you want to see how DataBuck can help improve your ETL tools and processes, schedule a demo today.


FAQs

Traditional ETL tools struggle to keep up with modern requirements because they rely on manual coding and rigid processes. They often can’t support real-time data processing or integrate smoothly with new cloud-based systems. As businesses move to decentralized data environments, these tools become too slow and inflexible.

When dealing with big data, you need an ETL tool that can process large volumes of data quickly without compromising performance. Look for features like distributed processing, support for multiple data formats, and scalability to ensure the tool can handle growing datasets. Tools like Hadoop and Talend Open Studio excel in these areas.

Yes, open-source ETL tools are a great option for businesses that need flexibility without a large budget. They offer many of the same features as paid solutions, and with the support of an active user community, they can be customized to fit your specific needs. However, open-source tools may require more technical expertise for setup and maintenance.

ETL tools can automate the data cleaning and validation process, ensuring your data is consistent and accurate before it’s loaded into the system. Tools like DataBuck take this a step further by integrating automated data quality checks into the ETL workflow, helping businesses avoid manual errors and improving decision-making.

The biggest challenge is ensuring that the data from different sources is compatible and consistent. ETL tools help by transforming the data into a uniform format, but the process can still be complicated if the tools aren’t capable of handling different data structures, formats, or real-time updates. Choosing a tool with strong integration capabilities and support for varied data types is essential.
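Here is a small sketch of what transforming data into a uniform format can look like. It assumes two hypothetical sources, a CRM and an HR system, that use different field names and date formats for the same information; the mappings, formats, and the `normalize` function are illustrative only.

```python
from datetime import datetime

# Hypothetical per-source mappings: source field name -> common field name.
FIELD_MAPS = {
    "crm": {"FullName": "name", "SignupDate": "joined", "Email": "email"},
    "hr": {"employee_name": "name", "start_date": "joined", "work_email": "email"},
}

# Hypothetical date formats used by each source.
DATE_FORMATS = {"crm": "%m/%d/%Y", "hr": "%Y-%m-%d"}


def normalize(record, source):
    """Rename fields to the common schema and convert dates to ISO 8601."""
    mapping = FIELD_MAPS[source]
    unified = {target: record[original] for original, target in mapping.items()}
    parsed = datetime.strptime(unified["joined"], DATE_FORMATS[source])
    unified["joined"] = parsed.date().isoformat()
    unified["email"] = unified["email"].strip().lower()
    unified["source"] = source  # keep lineage so records can be traced back
    return unified


if __name__ == "__main__":
    crm_record = {
        "FullName": "Ada Lovelace",
        "SignupDate": "03/15/2021",
        "Email": " Ada@Example.com ",
    }
    hr_record = {
        "employee_name": "Grace Hopper",
        "start_date": "2020-11-02",
        "work_email": "grace@example.com",
    }
    print(normalize(crm_record, "crm"))
    print(normalize(hr_record, "hr"))
```

Keeping the mappings in data rather than in code means that adding a new source is mostly a matter of adding one more entry, which is roughly what pre-built connectors do on a larger scale.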

The choice depends on your data needs. ETL processes transform data before loading it into the target system, which is useful when you need to ensure data quality before analysis. ELT tools, on the other hand, load data first and transform it within the target system, making them faster for large datasets but more reliant on the processing power of the target system. If speed and performance are critical, ELT might be the better choice.

The key is to choose an ETL tool that supports the data sources and systems you already use. Many modern ETL tools offer a wide range of pre-built connectors for databases, SaaS platforms, and cloud environments. Always check if the tool integrates with your specific applications, or opt for a vendor-agnostic tool that can connect with a variety of systems.

Beyond the initial cost of the software, businesses should consider expenses related to implementation, maintenance, and scaling. Some tools require specialized technical knowledge, which could increase hiring or consulting costs. Also, if your data needs grow, you might need to pay for additional storage or higher-tier plans, especially with cloud-based tools.

DataBuck works alongside your existing ETL tools to ensure that the data being processed is of high quality. It automatically checks your data for accuracy, consistency, and completeness, helping you avoid bad data entering your systems. This not only saves time but also improves the reliability of your data-driven decisions.

No-code ETL tools require no programming knowledge and are usually built with user-friendly interfaces for business users. Low-code tools allow for some level of customization through minimal coding, which is useful if your data processes require more specific configurations. No-code tools are easier to use but may not be as flexible as low-code options.

