Angsuman Dutta

CTO, FirstEigen

Establish Autonomous Data Observability and Trustability for AWS Glue Pipeline in 60 Seconds

The Challenge of Ensuring Data Quality in AWS Glue Pipelines

Data operations and engineering teams spend 30-40% of their time firefighting data issues raised by business stakeholders.

A large percentage of these data errors can be attributed to the errors present in the source system or errors that occurred or could have been detected in the data pipeline.

Current data validation approaches for the data pipeline are rule-based – designed to establish data quality rules for one data asset at a time—as a result, there are significant cost issues in implementing these solutions for 1000s of data assets/buckets/containers. Dataset-wise focus often leads to an incomplete set of rules or often not implementing any rules at all.

With the accelerating adoption of AWS Glue as the data pipeline framework of choice, the need for validating data in the data pipeline in real-time has become critical for efficient data operations and to deliver accurate, complete, and timely information.

This blog provides a brief introduction to DataBuck and outlines how to build a robust AWS Glue data pipeline to validate data as data moves along the pipeline.

Introducing DataBuck: Autonomous Data Validation Solution

What is DataBuck?

DataBuck is an advanced autonomous data validation solution purpose-built for validating data in the pipeline. It establishes a data fingerprint for each dataset using its ML algorithm. It then validates the dataset against the fingerprint to detect erroneous transactions. More importantly, it updates the fingerprints as the dataset evolves over time thereby reducing the efforts associated with maintaining the rules.

Key Benefits of DataBuck for AWS Glue:

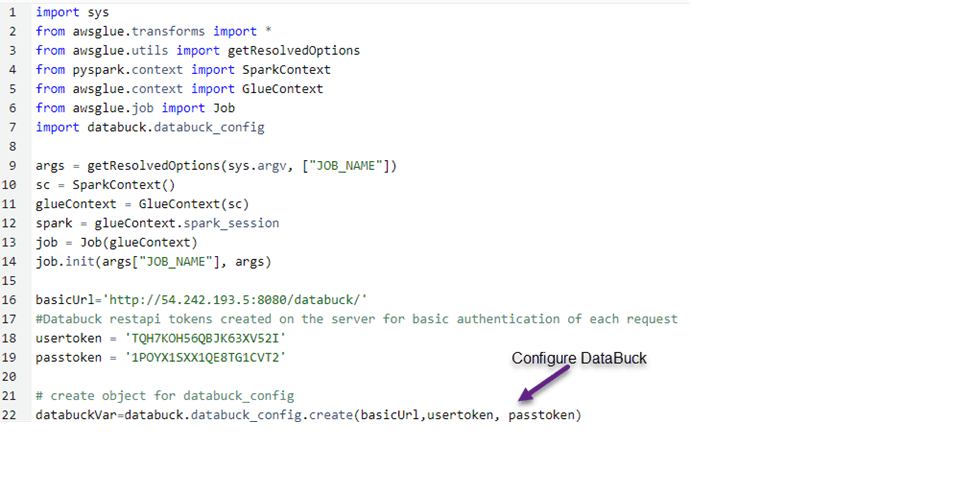

- Effortless Data Validation: Data engineers can easily integrate data validations into their AWS Glue pipelines by invoking a few Python libraries. There’s no need to have a prior understanding of the data or create manual data quality rules.

- Business Stakeholder Control: Business users gain visibility and control over auto-discovered data validation rules and thresholds, fulfilling compliance and audit requirements. Stakeholders can also access a detailed audit trail of data quality over time.

DataBuck’s AI-Powered Data Quality Dimensions

DataBuck leverages machine learning to monitor data through the lens of the following data quality dimensions:

- Freshness: Ensures data is received within the expected time window.

- Completeness: Identifies contextually important fields and checks their completeness.

- Conformity: Validates the format, pattern, and length of critical fields.

- Uniqueness: Confirms the uniqueness of records.

- Drift: Detects changes in categorical and continuous fields compared to historical data.

- Anomalies: Monitors for volume and value anomalies in crucial columns.

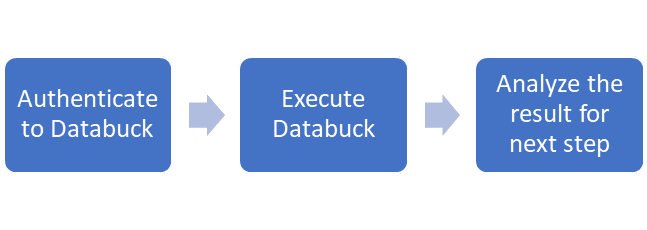

How to Set Up DataBuck for AWS Glue in 3 Simple Steps?

Using DataBuck within Glue job is a three step process as shown in the following diagram

Step 1: Authenticate and Configure DataBuck

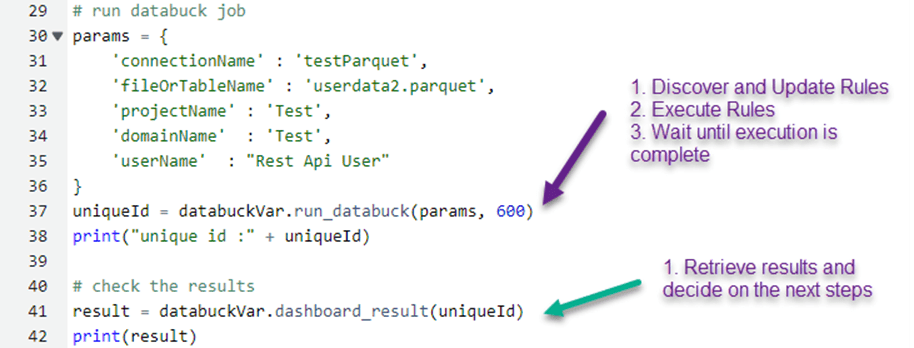

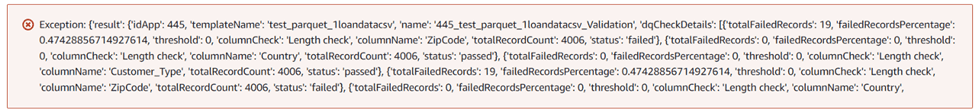

Step 2: Execute DataBuck

Step 3: Analyze the Result for the Next Step

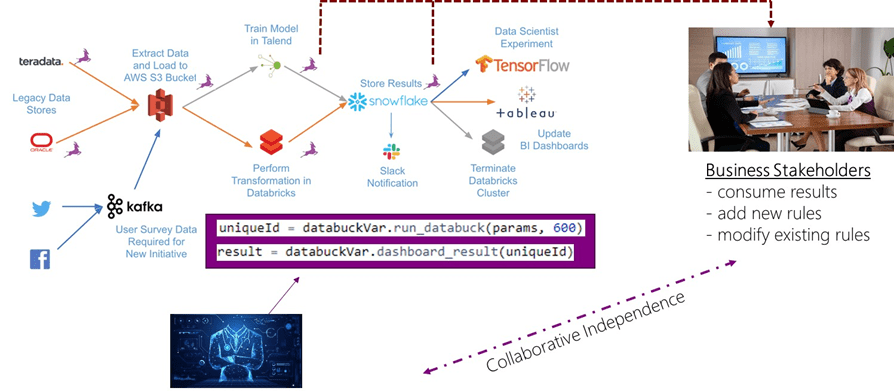

Business Stakeholder Visibility With DataBuck

In addition to providing programmatic access to validate AWS dataset within the Glue Job, DataBuck provides the following results for compliance and audit trail

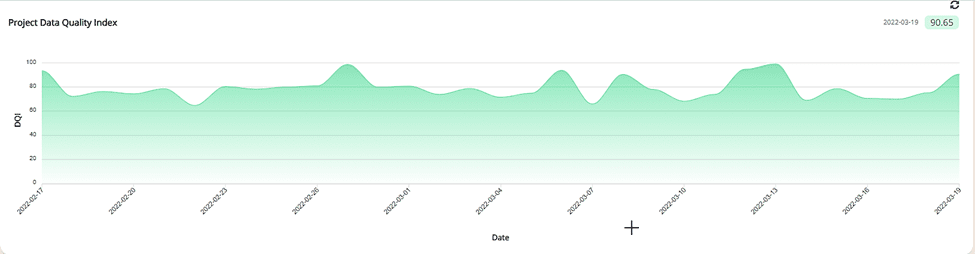

1. Data Quality of a Schema Overtime

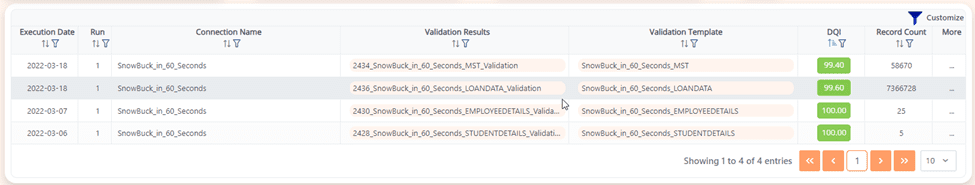

2. Summary Data Quality Results of Each Table

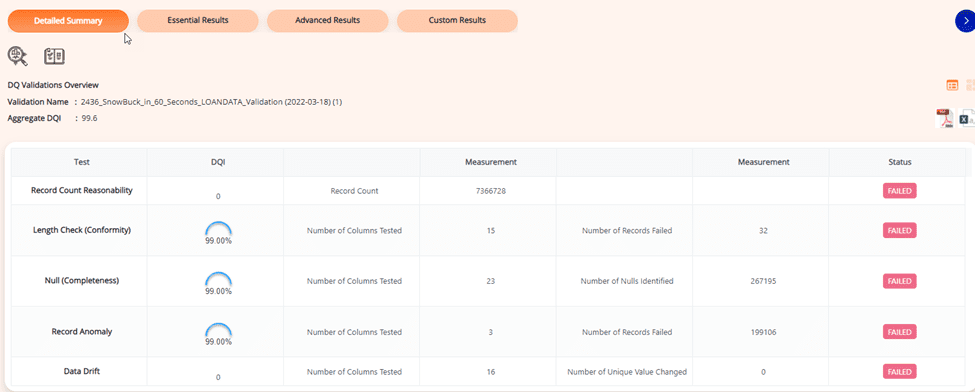

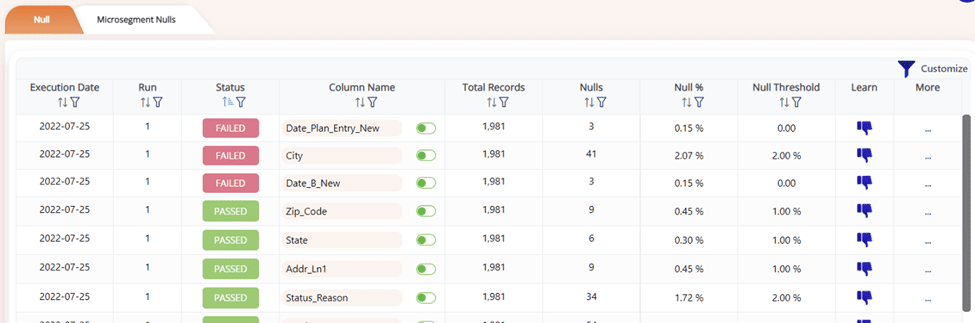

3. Detailed Data Quality Results of Each Table

4. Business Self-Service for Controlling the Rules

Summary: AWS Glue Pipeline Monitoring in 60 Seconds With DataBuck

DataBuck provides a secure and scalable approach to validate data in within the glue job. All it takes is a few lines of code and you can validate the data on an going manner. More importantly, your business stakeholder will have full visibility to the underlying rules and can control the rules and rule threshold using a business user friendly dashboard.

Contact FirstEigen today to learn how to set up DataBuck for AWS Glue Pipeline in 60 Seconds!

Check out these articles on Data Trustability, Observability & Data Quality Management-

FAQs

DataBuck enhances data observability by automatically detecting errors and anomalies as data flows through the AWS Glue Pipeline. It provides real-time insights into data quality, ensuring that business stakeholders can trust the data being processed.

Data trustability is essential because it ensures that the data being used for decision-making is accurate and reliable. Inaccurate data can lead to flawed insights and poor business decisions, making it crucial to validate and trust the data in AWS Glue Pipelines.

While this blog focuses on AWS Glue Pipelines, DataBuck can be integrated with various data pipeline frameworks, providing autonomous data validation across different environments.

Discover How Fortune 500 Companies Use DataBuck to Cut Data Validation Costs by 50%

Recent Posts

Get Started!