Seth Rao

CEO at FirstEigen

3 Top Big Data Uses in Financial Services

Data warehouses, lakes, and cloud services are notoriously error-prone. In the financial services (FinServ) sector, this is unacceptable. Unmonitored, unvalidated, and unreliable data places these firms at risk because of the sensitive nature of the big data they manage. If your FinServ firm needs solutions for its dark data issues, you’ll need to learn more about data quality for big data management.

Firms can validate less than 5% of their data as clean and accurate, which leaves your firm at risk if you make decisions based on the other 95%. Manual processes are unproductive, and errors compound over time, so fixing them becomes costly and time-consuming.

Data is vital to FinServ firms, and there are three significant big data use cases where your firm must protect this data at all costs. Your firm can manage this data effectively, but that requires an autonomous solution. Here’s what you need to know.

Key Takeaways

- Financial services are leveraging data in unprecedented ways for greater customer insights and growth. They have thousands of analytical models that need to be fed massive volumes of accurate data to produce reliable results so businesses can make effective decisions.

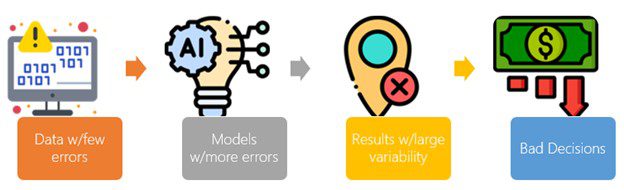

- The financial services industry is crippled by a lack of trust in data. Data in the cloud is notoriously error-prone. With every step a data error moves downstream, it compounds, and fixing it takes ten times the cost and effort.

- Even the best companies can monitor less than 5% of their data because the work is labor-intensive. The other 95% is dark data: unmonitored, unvalidated, and unreliable. Automating data quality (DQ) monitoring using artificial intelligence and machine learning (AI/ML) is the only way to get reliable data.

The Importance of Big Data in Financial Services

Analysts estimate that by 2025, approximately 463 exabytes of data will be created every day worldwide. Financial services is a constantly evolving industry, and its big data use cases depend on data science to process data at this scale. That dependence is why big data is increasingly vital to the industry.

There are three distinct areas where big data management is vital in FinServ. For example, banks must be careful with the sensitive data associated with the lending process. Cloud data must not just be accurate but also confidential.

1. Risk Assessment and Management

Corporations are shifting their decision-making processes from humans to machine learning algorithms. This reduces the risk of human error in data while also enhancing efficiency.

FinServ firms can leverage models that predict the risk associated with specific ventures, giving them the opportunity to make more accurate decisions. Additionally, the integration of big data tools enables these firms to process vast amounts of data quickly, providing insights that were previously unattainable. FinServ professionals don’t have to sacrifice quality when doing their due diligence and decision-making analysis.

For example, financial planners determine whether a person can qualify for a mortgage based on credit history and lending criteria. Before AI/ML, these processes were done manually. Pre-built data models can now assess applicants and deliver approvals more quickly and reliably. Without this technology, small data errors eventually lead to bad decisions for businesses and consumers.

2. Fraud Detection and Prevention

Financial institutions use ML to recognize unusual consumer buying habits or practices in real time. Banks can act quickly, reducing losses for businesses and consumers.

For instance, as cybercrime has increased in recent years, banking algorithms block additional purchases when they detect unusual activity on an affected credit card or bank account.
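As a rough illustration of how unusual activity might be flagged, the sketch below compares a new purchase against a cardholder's past purchase amounts using a simple z-score. This is a deliberately simplified stand-in for the ML models banks actually run; the function name `is_unusual`, the threshold of 3 standard deviations, and the sample amounts are all assumptions made for the example.

```python
from statistics import mean, stdev


def is_unusual(history, amount, z_threshold=3.0):
    """Return True if `amount` deviates strongly from past purchase amounts.

    `history` is a list of past purchase amounts for one card (hypothetical data).
    `z_threshold` is an assumed cutoff, not an industry standard.
    """
    if len(history) < 2:
        return False  # not enough history to estimate normal behavior
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every past purchase was identical, so any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > z_threshold


# Hypothetical purchase history for one cardholder.
past_purchases = [42.0, 38.5, 55.0, 47.25, 40.0, 51.0]

print(is_unusual(past_purchases, 49.0))    # False: in line with past spending
print(is_unusual(past_purchases, 2500.0))  # True: far outside the usual range
```

A production system would look at many more signals than the amount (merchant, location, time of day), but the principle is the same: learn what normal looks like, then act on deviations in real time.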

3. Predictive Analytics and Forecast Planning

Data is the most valuable asset a company has. But if your data sets are bad or inaccurate, any analytics built on them will be useless, and you will lose the insights that data could have provided.

The first step is to make sure all data passes an integrity check before any software tool analyzes it. But, again, manual processes are too time-consuming. Automation is the only effective solution.
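An integrity check of this kind can be sketched as a gate that sits in front of the analytics step and only lets clean records through. The example below is a minimal sketch, not a description of any particular product: the field names (`account_id`, `balance`), the helper names, and the sample records are hypothetical.

```python
def check_record(record, required_fields):
    """Return a list of problems found in one record (empty list means it passed)."""
    problems = []
    for field in required_fields:
        value = record.get(field)
        if value is None or value == "":
            problems.append(f"missing {field}")
    balance = record.get("balance")
    if balance is not None and balance != "" and not isinstance(balance, (int, float)):
        problems.append("balance is not numeric")
    return problems


def split_by_integrity(records, required_fields):
    """Separate records that pass every check from those that fail at least one."""
    clean, rejected = [], []
    for record in records:
        problems = check_record(record, required_fields)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected


# Hypothetical input: one good record, one with a blank ID, one with a bad balance.
records = [
    {"account_id": "A1", "balance": 1200.50},
    {"account_id": "", "balance": 300},
    {"account_id": "A3", "balance": "n/a"},
]

clean, rejected = split_by_integrity(records, ["account_id", "balance"])
print(len(clean), "record(s) passed")
for record, problems in rejected:
    print("rejected:", record, problems)
```

Only the `clean` list would be handed to the forecasting or analytics tools; the `rejected` list goes back to the data owners for correction.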

Data science also allows firms to analyze both historical and real-time data. Forecast planning makes it easier for these firms to predict the way the market will go. It also provides insights on which investments are more feasible according to recent trends.

This means, for example, financial firms can use statistics to choose stocks for long-term investments. They can use this data to offer products to clients and also set rates for services.

How Automation Solves Data Errors in Big Data

Big data can be a powerful tool for financial service companies. Yet, the quality and availability of data often prevent them from using it optimally. Effective big data management enables FinServ firms to locate valuable information even in unstructured data sets, and that requires automation to increase productivity.

Automating the data quality process as part of the data pipeline improves a company’s ability to meet customer demands and solves its problems with unreliable data.

Autonomous data monitoring software can catch unreliable data early. There is no need to write rules by hand to catch data errors because the software handles these processes automatically. Users benefit in the following ways:

- Reports, analytics, and models they can trust

- Lower costs in data maintenance

- Increased efficiency in scaling DQ operations

- Boost in productivity
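One way to picture monitoring without hand-written rules is to let the acceptable range for a metric be learned from the data's own history. The sketch below is an assumption-laden illustration of that idea and is not how any specific product works: it derives bounds for a column's daily null rate from past observations (mean plus or minus three standard deviations, an assumed choice) and raises an alert when today's rate falls outside them. All names and numbers are hypothetical.

```python
from statistics import mean, stdev


def learn_bounds(daily_null_rates, k=3.0):
    """Derive an expected range for the null rate from historical daily values."""
    mu = mean(daily_null_rates)
    sigma = stdev(daily_null_rates)
    # Clamp to [0, 1] because a null rate is a proportion.
    return max(0.0, mu - k * sigma), min(1.0, mu + k * sigma)


def null_rate(values):
    """Fraction of values in a column that are missing (None)."""
    if not values:
        return 0.0
    return sum(1 for v in values if v is None) / len(values)


# Hypothetical history: the column has been about 1% null on previous days.
history = [0.010, 0.012, 0.009, 0.011, 0.010, 0.013]
low, high = learn_bounds(history)

# Hypothetical load for today, where half of the values are missing.
todays_column = [100.0, None, 250.0, None, None, 75.0, 310.0, None]
rate = null_rate(todays_column)

alert = not (low <= rate <= high)
print(f"null rate today: {rate:.2f}, alert: {alert}")
```

Nobody had to write a rule such as "null rate must stay below 2%"; the threshold came from the column's past behavior, which is what lets this kind of check scale across thousands of tables.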

The Upside of Big Data for FinServ Firms

A data-driven approach to FinServ offerings is not new, but it has never been more valuable. As financial firms face growing big data management concerns across both traditional and digital methods, they require automation software tools to ensure data accuracy.

They can reduce risk from big data and increase the productivity of their employees by automating the DQ process as the first step of their data pipeline.

DataBuck by FirstEigen is a digital assembly line for automatically creating DQ validation rules for data completeness, conformity, uniqueness, and more. DataBuck will not only automate your menial business processes but also continuously improve and update them over time with minimal human intervention.
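To make the three named dimensions concrete, here is a minimal sketch of how completeness, conformity, and uniqueness could each be measured for a single column. It is an illustration only and does not represent DataBuck's API or internals; the function names, the `AC-1234` account ID format, and the sample values are assumptions.

```python
import re


def _present(values):
    """Values that are neither None nor an empty string."""
    return [v for v in values if v is not None and v != ""]


def completeness(values):
    """Share of all values that are present."""
    if not values:
        return 0.0
    return len(_present(values)) / len(values)


def conformity(values, pattern):
    """Share of present values that fully match the expected format."""
    present = _present(values)
    if not present:
        return 0.0
    regex = re.compile(pattern)
    return sum(1 for v in present if regex.fullmatch(str(v))) / len(present)


def uniqueness(values):
    """Share of present values that are distinct."""
    present = _present(values)
    if not present:
        return 0.0
    return len(set(present)) / len(present)


# Hypothetical account ID column: one null, one blank, one duplicate, one bad format.
account_ids = ["AC-1001", "AC-1002", None, "AC-1002", "1003", ""]

print("completeness:", round(completeness(account_ids), 2))        # 0.67
print("conformity:", conformity(account_ids, r"AC-\d{4}"))         # 0.75
print("uniqueness:", uniqueness(account_ids))                      # 0.75
```

Each score is a proportion between 0 and 1, so the same three checks can be tracked over time for every column and compared against whatever level a firm considers acceptable.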

Schedule a demo today to learn more about how to secure accurate, clean data within the FinServ industry.


FAQs

Big data refers to the vast volumes of structured and unstructured data generated every day. In financial services, it is crucial for gaining insights, enhancing customer experiences, managing risks, and ensuring compliance. Effective big data management helps firms make informed decisions and improves operational efficiency.

Automation enhances data quality by continuously monitoring and validating data, which reduces human error and minimizes the time spent on manual processes. By automating data quality processes, financial firms can ensure that they work with accurate, reliable data, leading to better decision-making.

Challenges include data accuracy, validation, and the ability to manage dark data (unmonitored and unvalidated data). Many firms struggle to monitor the vast amounts of data they generate, leading to potential risks in decision-making. Implementing automated solutions can help mitigate these issues.

DataBuck automates the creation of data quality validation rules, ensuring data completeness, conformity, and uniqueness. This tool streamlines data management processes, improves data accuracy, and helps firms maintain reliable data for better decision-making.

Firms should assess their existing data infrastructure, identify areas where data quality improvements are needed, and choose solutions that integrate seamlessly with their operations. Additionally, investing in automation tools like DataBuck can significantly enhance their data management capabilities.
