Angsuman Dutta

CTO, FirstEigen

Data Errors Are Costing Financial Services Millions: How Can Automation Save the Day?

Data quality issues continue to plague financial services organizations, resulting in costly fines, operational inefficiencies, and reputational damage. Even industry leaders such as Charles Schwab and Citibank have been severely affected by poor data management, underscoring the urgent need for more effective data quality processes across the sector.

Key Examples of Data Quality Failures

- Charles Schwab & TD Ameritrade Acquisition: Since Schwab acquired TD Ameritrade, users have reported multiple errors, such as login issues, balance discrepancies, and inaccurate stock data. These problems point to fundamental gaps in Schwab’s data quality processes, frustrating users and raising concerns about the robustness of its data management.

- Citibank: Citibank has faced numerous regulatory penalties for data governance failures. In 2020, the Office of the Comptroller of the Currency (OCC) fined the bank $400 million for inadequate data governance, internal controls, and risk management. Despite efforts to address these deficiencies, regulators imposed additional fines totaling $136 million in 2024 for insufficient progress, particularly in regulatory reporting.

These incidents underline a critical issue: financial services organizations continue to struggle with data quality management, despite significant investments.

The Real Cost of Bad Data

The financial losses from poor data quality are immense. According to BaseCap Analytics, bad data can cause businesses to lose up to 15% of their revenue. In financial services, where precision is crucial, the cost of bad data extends beyond direct financial loss: it also undermines decision-making, customer trust, and compliance with regulatory requirements.

The Misstep of Focusing on Dashboards and KPIs

Many organizations make the mistake of focusing heavily on creating dashboards and tracking numerous Key Performance Indicators (KPIs), believing that this will solve their data challenges. While dashboards can be useful, they often provide only superficial visibility into data health. The real questions businesses should be asking are:

- Are data risks being adequately addressed? Can current data systems detect unanticipated errors, or do they only catch problems that are already known?

- Is there adequate coverage of critical data assets? Are organizations validating all important data pipelines and assets, or is only a fraction of the data being properly monitored and validated?

Focusing solely on metrics or dashboard views without ensuring comprehensive data quality coverage and risk management can create a false sense of security. True data observability involves proactive monitoring for unexpected errors and ensuring all critical data flows are safeguarded.
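To make the coverage question concrete, the Python sketch below measures validation coverage against an inventory of critical assets rather than against whatever happens to be monitored. The asset names, the `checks` mapping, and the `coverage` helper are hypothetical illustrations, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoverageReport:
    covered: frozenset[str]
    uncovered: frozenset[str]

    @property
    def ratio(self) -> float:
        """Share of critical assets that have at least one active check."""
        total = len(self.covered) + len(self.uncovered)
        return len(self.covered) / total if total else 1.0


def coverage(critical_assets: set[str], checks: dict[str, list[str]]) -> CoverageReport:
    """Hypothetical helper: split critical assets into those with at least one
    configured check and those with none. Non-critical assets are ignored."""
    covered = {asset for asset in critical_assets if checks.get(asset)}
    return CoverageReport(frozenset(covered), frozenset(critical_assets - covered))


# Hypothetical inventory of critical assets and the checks configured per asset.
critical = {"trades", "positions", "client_balances", "regulatory_report"}
checks = {
    "trades": ["not_null:trade_id", "row_count_anomaly"],
    "positions": ["not_null:account_id"],
    "marketing_clicks": ["not_null:user_id"],  # monitored, but not critical
}

report = coverage(critical, checks)
print(f"coverage: {report.ratio:.0%}")         # coverage: 50%
print("uncovered:", sorted(report.uncovered))  # uncovered: ['client_balances', 'regulatory_report']
```

A dashboard that simply counts monitored assets would show three here; measuring against the critical inventory instead shows that half of it, including the regulatory report, has no validation at all.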

Root Causes of Data Quality Failures

- Data Deluge and Outdated Processes: With the rise of cloud computing, big data, and analytics, the sheer volume of data that needs to be managed has exploded. While modern engineering teams can rapidly onboard new data assets using agile and CI/CD methodologies, data quality processes often lag behind, relying on outdated methods. As a result, errors slip through undetected and cause downstream issues.

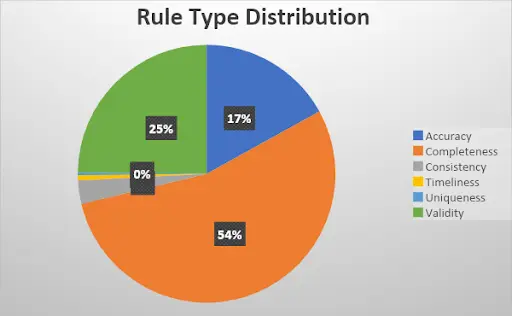

- Superficial Data Quality Programs: Many organizations focus on detecting minor issues, such as null values, while critical errors are overlooked. Audits show that 40-60% of data quality checks target basic problems that rarely occur in modern systems. This creates a false sense of security and leaves organizations vulnerable to more significant, undetected errors.

- Siloed Data Quality Programs: Data quality efforts are often fragmented across departments, making it difficult to identify and address errors that span multiple systems or pipelines. A lack of integration prevents a holistic view of data health.

- Limited Business Involvement: Data quality teams often operate in isolation, with little input from business stakeholders. As a result, business teams may not know which data quality checks are in place or how effective they are. This lack of oversight can allow major issues to go unnoticed until they become critical.
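The difference between a superficial check and one that catches cross-system errors can be shown with a small, hypothetical example. In the Python sketch below, a ledger extract passes a basic null check, yet reconciling it against transaction totals from a second system exposes two breaks. The account IDs, amounts, one-cent tolerance, and helper names (`null_check`, `reconcile`) are assumptions for illustration only.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Optional

# Hypothetical extract from a core ledger: account_id -> reported balance.
ledger_balances: dict[str, Optional[Decimal]] = {
    "A-100": Decimal("1500.00"),
    "A-200": Decimal("320.50"),
    "A-300": Decimal("0.00"),
}

# Hypothetical feed from a separate transactions system: (account_id, amount).
transactions = [
    ("A-100", Decimal("1000.00")),
    ("A-100", Decimal("500.00")),
    ("A-200", Decimal("300.50")),
    ("A-300", Decimal("75.00")),
]


def null_check(balances: dict[str, Optional[Decimal]]) -> list[str]:
    """Basic single-table check: accounts with a missing balance."""
    return [acct for acct, bal in balances.items() if bal is None]


def reconcile(balances, txns, tolerance=Decimal("0.01")) -> dict[str, Decimal]:
    """Cross-system check: each ledger balance should equal the sum of that
    account's transactions in the other system. Returns account -> difference."""
    totals = defaultdict(Decimal)  # Decimal() is Decimal("0")
    for acct, amount in txns:
        totals[acct] += amount
    breaks = {}
    for acct in sorted(balances.keys() | totals.keys()):
        # Missing or None balances count as zero here; null_check reports them separately.
        diff = (balances.get(acct) or Decimal("0")) - totals[acct]
        if abs(diff) > tolerance:
            breaks[acct] = diff
    return breaks


print("null check failures:", null_check(ledger_balances))
# null check failures: []
print("reconciliation breaks:", reconcile(ledger_balances, transactions))
# reconciliation breaks: {'A-200': Decimal('20.00'), 'A-300': Decimal('-75.00')}
```

Each table looks healthy on its own; the errors only surface when the two systems are compared, which is exactly what siloed, per-department checks tend to miss.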

The Path Forward: A Comprehensive Solution Framework

To avoid the high costs of bad data, financial services organizations need to adopt advanced, proactive approaches to data quality management. Rather than focusing on dashboards and KPIs, businesses should ensure that their data quality systems address the most critical questions:

- Is there adequate coverage of all critical data assets and pipelines? Organizations must ensure that all critical data is being monitored and validated, not just a fraction of their data assets.

- Does the current system detect unanticipated errors? A robust data quality solution must be capable of identifying unknown or unexpected data errors, not just those that are already anticipated.
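One simple way to catch errors nobody wrote a rule for is to learn a baseline from history and flag large deviations. The Python sketch below applies a modified z-score (median and median absolute deviation, with the conventional 3.5 cutoff) to daily row counts. It is a basic statistical stand-in, not the machine-learning approach a production tool might use, and the counts and the `mad_anomaly` helper are hypothetical.

```python
import math
from statistics import median


def mad_anomaly(history: list[float], value: float, threshold: float = 3.5) -> tuple[bool, float]:
    """Return (is_anomaly, modified_z_score) for `value` against `history`.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a few past outliers do not distort the baseline much.
    """
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # Perfectly flat history: any change at all is unusual.
        if value == med:
            return (False, 0.0)
        return (True, math.copysign(math.inf, value - med))
    score = 0.6745 * (value - med) / mad
    return (abs(score) > threshold, score)


# Hypothetical daily row counts for a trades table over the past week.
daily_row_counts = [10120, 9980, 10250, 10050, 9900, 10175, 10030]

flagged, score = mad_anomaly(daily_row_counts, 6200)  # e.g. a partially loaded file
print(flagged, round(score, 1))                 # True -37.1
print(mad_anomaly(daily_row_counts, 10100)[0])  # False
```

No one had to anticipate a "partial load" scenario in advance; the drop is flagged simply because it falls far outside the table's recent behavior.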

A Modern Data Quality Solution Should Also Meet the Following Key Criteria

- Machine Learning Enabled: Leverage AI/ML to detect errors in data related to freshness, completeness, consistency, and drift.

- Autonomous: Automate the process of establishing and updating validation checks for new or changing data sources.

- Support for Diverse Data Formats: Handle multiple data formats, such as Parquet, JSON, and CSV, without complex transformations.

- Scalability: Scale with the organization’s data volumes and platforms as they grow.

- In-Situ Validation: Validate data at the source, reducing latency and avoiding the security risks of copying data into a separate system.

- Serverless Architecture: Use serverless infrastructure to ensure cost-effective scalability.

- Seamless Integration: Integrate with existing data pipelines, enabling real-time validation and monitoring.

- Business Stakeholder Control: Provide business users with the ability to modify data quality rules without relying on technical support.
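Two of these criteria, autonomous rule generation and business stakeholder control, can be sketched together. The Python example below infers naive completeness, range, and allowed-value rules from a trusted reference batch, round-trips them through JSON as a stand-in for a rules file a business user could edit, and validates a new batch. The column names, data, heuristics, and helper names (`infer_rules`, `validate`) are hypothetical and do not describe how any specific product works.

```python
import json


def infer_rules(rows: list[dict], max_categories: int = 10) -> dict:
    """Hypothetical helper: derive simple per-column rules from a trusted batch."""
    rules = {}
    for col in sorted({key for row in rows for key in row}):
        present = [row[col] for row in rows if row.get(col) is not None]
        rule = {"min_completeness": len(present) / len(rows)}
        distinct = set(present)
        if present and all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in present):
            rule["min"], rule["max"] = min(present), max(present)
        elif present and len(distinct) <= max_categories and len(distinct) < len(present):
            # Repeated, low-cardinality values are treated as a categorical column.
            rule["allowed"] = sorted(distinct)
        rules[col] = rule
    return rules


def validate(rows: list[dict], rules: dict) -> list[str]:
    """Hypothetical helper: return human-readable rule violations for a batch."""
    issues = []
    for col, rule in rules.items():
        present = [row[col] for row in rows if row.get(col) is not None]
        completeness = len(present) / len(rows) if rows else 1.0
        if completeness < rule["min_completeness"]:
            issues.append(f"{col}: completeness {completeness:.0%} < {rule['min_completeness']:.0%}")
        if "min" in rule:
            out = [v for v in present if not rule["min"] <= v <= rule["max"]]
            if out:
                issues.append(f"{col}: {len(out)} value(s) outside [{rule['min']}, {rule['max']}]")
        if "allowed" in rule:
            unexpected = sorted({v for v in present if v not in rule["allowed"]})
            if unexpected:
                issues.append(f"{col}: unexpected values {unexpected}")
    return issues


# Hypothetical trusted reference batch.
reference = [
    {"account_id": "A-100", "currency": "USD", "amount": 250.0},
    {"account_id": "A-200", "currency": "EUR", "amount": 1200.0},
    {"account_id": "A-300", "currency": "USD", "amount": 75.5},
]
rules = infer_rules(reference)

# Round-trip through JSON (a stand-in for a rules file a business user reviews),
# then apply a hypothetical business edit: the approved upper limit is 5000.
config = json.loads(json.dumps(rules, indent=2))
config["amount"]["max"] = 5000.0

new_batch = [
    {"account_id": "A-400", "currency": "USD", "amount": 3000.0},
    {"account_id": "A-500", "currency": "GBP", "amount": 90.0},
    {"account_id": None, "currency": "EUR", "amount": 10.0},
]
for issue in validate(new_batch, config):
    print(issue)
# account_id: completeness 67% < 100%
# amount: 1 value(s) outside [75.5, 5000.0]
# currency: unexpected values ['GBP']
```

Without the business edit, the 3,000 amount would also be flagged, because the inferred bounds simply mirror the reference batch. Keeping rules in a plain, reviewable format is what lets a business user correct that kind of over-strict inference without a code change.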

By addressing these core areas, financial services organizations can significantly reduce the risks associated with poor data quality, improve operational efficiency, and ensure compliance with regulatory requirements.
