Overcome the Limitations of AWS Deequ with AI/ML

Data Trust Score Without Coding

Scalable

Set up 1,000 data assets in less than 40 hours

Fast

Validate 100 million records in 60 seconds

Better

Look for 14 types of data errors

Economical

Validate 10,000 data assets for less than $50

Secure

No Data leaves your Data Platform

Integrable

Data Pipeline, Data Governance, Alert System, Ticketing System

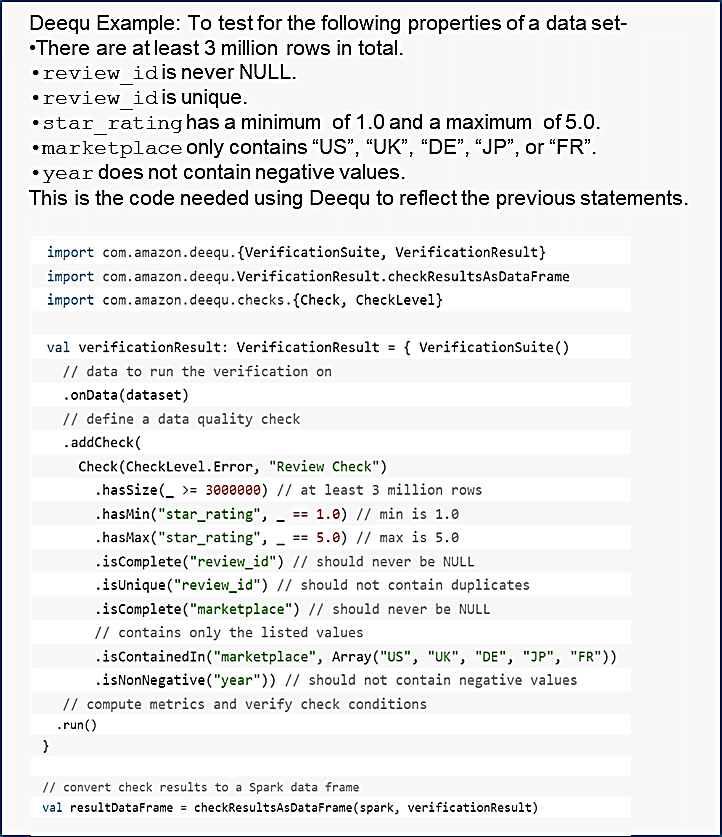

Problems with AWS Deequ

Solutions like Deequ, Griffin, and Great Expectations rely on a rule-based approach to validate data in AWS S3. Data engineering teams experience the following operational challenges when integrating these data validation solutions:

Labor Intensive

- Every dataset’s underlying behavior has to be deeply analyzed and understood.

- Subject matter experts must be consulted to determine which rules need to be implemented.

- Rules must be implemented separately for each bucket, so the effort grows linearly with the number of buckets in the S3 data lake.
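To make the per-bucket effort concrete, here is a minimal sketch of a hand-written, rule-based validator in plain Python. It does not use the Deequ, Griffin, or Great Expectations APIs; the bucket names, record fields, and rules are all hypothetical and exist only for illustration.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Rule = Callable[[Record], bool]

# Hypothetical hand-written rules: every bucket needs its own list,
# written after someone has studied that bucket's data.
RULES_BY_BUCKET: Dict[str, List[Rule]] = {
    "orders-bucket": [
        lambda r: r.get("order_id") is not None,
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ],
    "customers-bucket": [
        lambda r: isinstance(r.get("email"), str) and "@" in r["email"],
    ],
}


def validate(bucket: str, records: List[Record]) -> int:
    """Return how many records violate at least one rule for this bucket."""
    rules = RULES_BY_BUCKET[bucket]  # raises KeyError if nobody wrote rules yet
    return sum(1 for r in records if not all(rule(r) for rule in rules))


def total_rules() -> int:
    """Total number of rules someone had to write and must now maintain."""
    return sum(len(rules) for rules in RULES_BY_BUCKET.values())
```

In this sketch, a bucket with no entry in `RULES_BY_BUCKET` cannot be validated at all, and `total_rules()` grows with every bucket added, which is the linear effort described above.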

Incomplete Rules Coverage

- Users must predict everything that could go wrong and write rules for it.

- The quality of rules coverage is non-standard and user-dependent.

Lack of Auditability

- It’s challenging for the business to go back a week or a month and review past results or compare them to the present.
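As an illustration of what an audit trail involves, the sketch below stores one validation result per dataset per day and compares two dates. It is an assumption-laden example, not a description of how DataBuck or Deequ store results; the table layout, function names, and dataset name are hypothetical.

```python
import sqlite3
from datetime import date
from typing import Optional


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a small results store; the schema is hypothetical."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        "run_date TEXT, dataset TEXT, failed_records INTEGER, "
        "PRIMARY KEY (run_date, dataset))"
    )
    return conn


def record_result(conn: sqlite3.Connection, run_date: date,
                  dataset: str, failed_records: int) -> None:
    """Persist the outcome of one validation run."""
    conn.execute(
        "INSERT OR REPLACE INTO results VALUES (?, ?, ?)",
        (run_date.isoformat(), dataset, failed_records),
    )
    conn.commit()


def failed_on(conn: sqlite3.Connection, run_date: date,
              dataset: str) -> Optional[int]:
    """Look up the failed-record count for a past run, if one was stored."""
    row = conn.execute(
        "SELECT failed_records FROM results WHERE run_date = ? AND dataset = ?",
        (run_date.isoformat(), dataset),
    ).fetchone()
    return row[0] if row else None


def change_between(conn: sqlite3.Connection, dataset: str,
                   earlier: date, later: date) -> Optional[int]:
    """Difference in failed records between two runs (None if either is missing)."""
    before = failed_on(conn, earlier, dataset)
    after = failed_on(conn, later, dataset)
    if before is None or after is None:
        return None
    return after - before
```

Without a store of this kind, a question such as "how did last week's run compare with today's?" cannot be answered after the fact.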

Not Scalable for Thousands of Data Assets

Our 10-Point Framework to Overcome the Limitations of Deequ

- Machine Learning Enabled

- Autonomous

- Data Variety Support

- Scalability

- In-Situ

- Serverless

- Part of the Data Validation Pipeline

- Integration and Open API

- Audit Trail/Visibility of Results

- Business Stakeholder Control

Solution: DataBuck - Trusted Data Without Coding

DataBuck is a scalable solution that delivers trusted data for tens of thousands of datasets, leveraging AI/ML to autonomously track data and flag data errors. It automatically detects 100% of data errors caused by “Systems Risks” with zero human intervention. Errors creep into the data pipeline and steadily multiply like cancer across an enterprise. With every step an error spreads, fixing it takes 10x more cost and effort. Users benefit from:

- Trustable reports, analytics & models

- Lower data maintenance work & cost

- 10x greater efficiency in scaling Data Quality operations

DataBuck is recognized by Gartner and IDC as the most innovative DQ validation software.

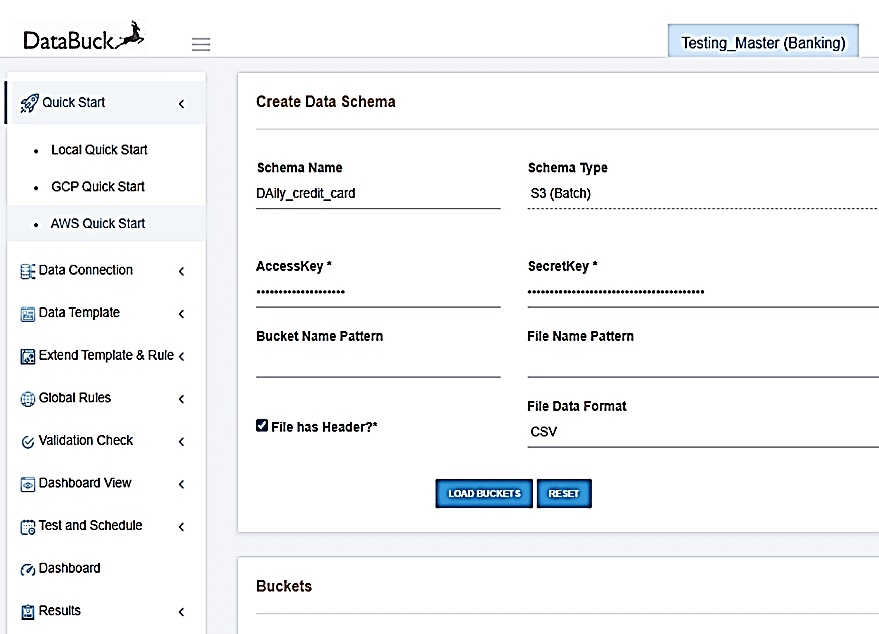

Set up data quality validation for thousands of buckets in just a few clicks with DataBuck

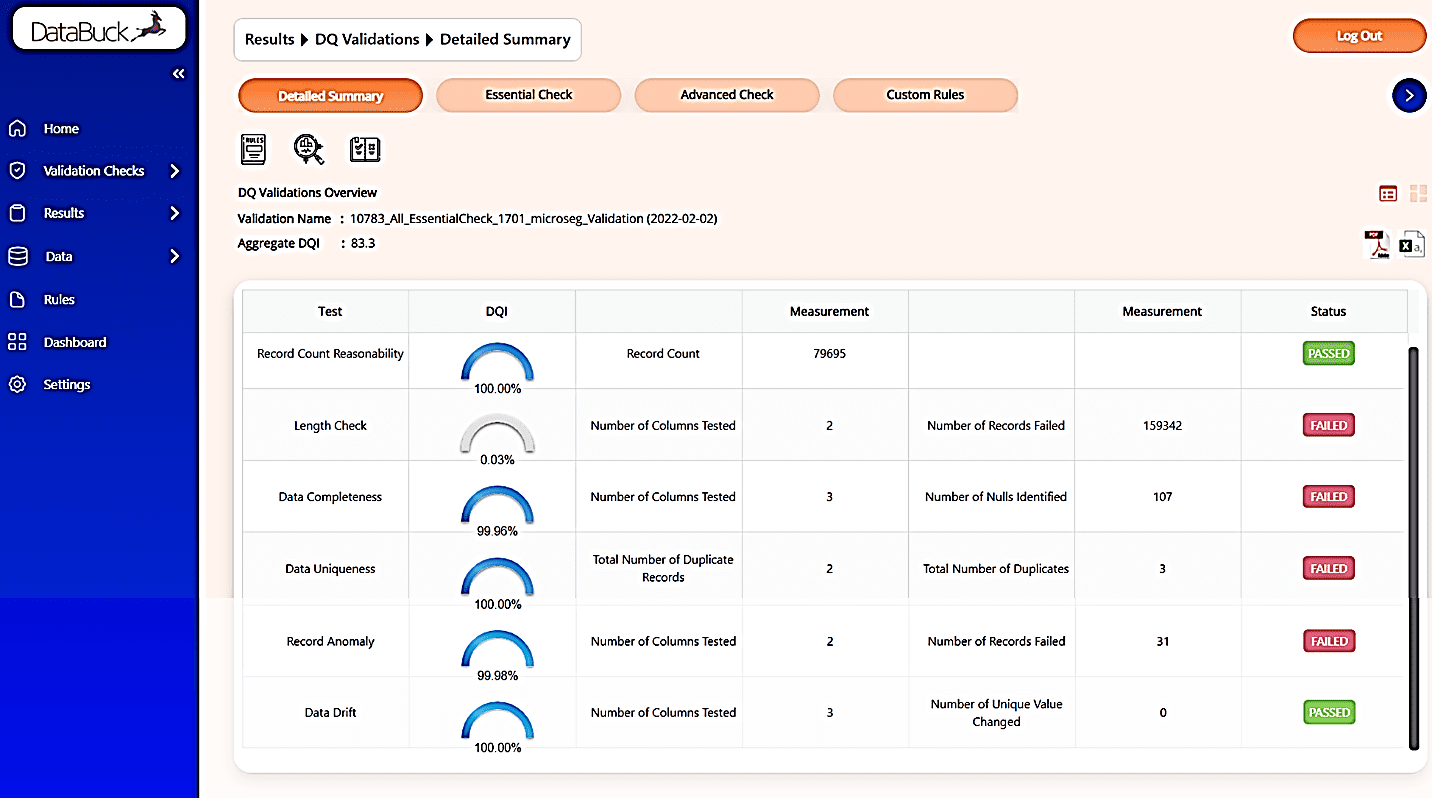

The out-of-the-box dashboard lets you slice and dice data quality validation results, making them easy to audit

Benefits of Automating Data Quality Validation on AWS

Get a consumable, crystal-clear stream of data from AWS, along with these benefits:

People productivity boost: >80%

Reduction in unexpected errors: 70%

Cost reduction: >50%

Time reduction to onboard a data set: ~90%

Increase in processing speed: >10x

Cloud native

Introduction to Data Quality Monitoring: Why Is It Important?

FirstEigen recognized at AWS re:Invent as a best-of-breed DQ tool

Autonomous cloud Data Quality validation demo with DataBuck

Friday Open House

Our development team is available every Friday from 12:00 to 1:00 PM PT (3:00 to 4:00 PM ET). Drop by and say "Hi" to us!