What is Data Monitoring and Why Do You Need It?

Organizations rely on information to make decisions that affect people and revenue. Data monitoring supports those decisions by ensuring that submitted data is reliable and meets the organization's criteria. It keeps checking the data to verify that it still conforms to the rules of the business, even if those rules change. Today, 88% of companies use market research to make business decisions. For businesses, understanding data monitoring translates to maintaining reliable and valuable information.

Read on to better understand how data monitoring works and why you need it.

Key takeaways:

● Data monitoring is a process that continually checks provided information for quality and conformity based on an organization’s standards.

● The most significant benefit from data monitoring is that business decisions result from accurate and relevant information.

● Data monitoring addresses at least four aspects of data quality: completeness, conformity, uniqueness, and accuracy.

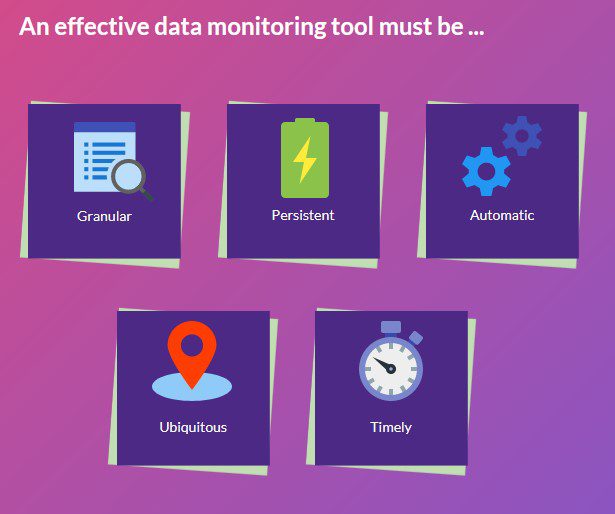

● Five characteristics of an effective data monitoring solution are granularity, ubiquity, automation, persistence, and timeliness.

An Intro to Data Monitoring

Data monitoring is a process that continually checks provided information for quality and conformity based on an organization’s standards.

What kind of data do businesses check? The applications are many, but here are some examples:

● Account and transactional data at banks

● Sales performance at pharmaceutical companies

● Patient records from a health care provider

● IoT data from sensors that monitor thousands of locations and pieces of equipment

● Global employee records for companies

● Rebate tracking and validation

● Validating financial data reported to Wall Street

Why is Data Monitoring Important?

The most significant benefit from data monitoring is that business decisions result from accurate and relevant information. Should a company invest in a particular product? Should a pharmacy chain close some of its stores? Should a business shift its advertising focus to a specific demographic? Since these decisions impact the company and people, reliable data is vital.

In addition, modern data monitoring tools have the added advantage of automation. There is no need for a worker to manually verify the data, thus freeing them for other tasks. Overall, automated data monitoring boosts worker performance, reduces errors, and reduces operational costs.

4 Aspects of Data Quality That Data Monitoring Evaluates

Next, let’s dig a little deeper into the inner workings of data monitoring. An effective data monitoring solution will address at least the following four aspects of data quality. When data fails a check, an administrator can configure the monitoring tool to either raise an alert or discard the data.

1. Completeness

When receiving data, a fundamental check is whether the information is complete. For example, here is an entry from a subscribers’ list:

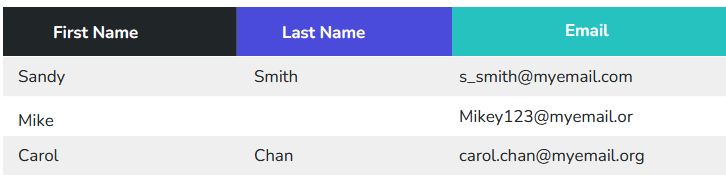

The information provided by Sandy and Carol looks correct. However, there are a few issues with Mike’s data. First, the “Last Name” column is blank. Second, a letter is missing at the end of Mike’s email address. These are both examples of incomplete data.

Another example of incomplete data is when a field has a default value. Say a user living in New Jersey fills out their address information but leaves the state with the default value “AL” (Alabama). This discrepancy will also need addressing.
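The two completeness checks described above (blank fields and untouched default values) can be sketched in a few lines of Python. This is a minimal illustration, not how any particular monitoring product works: the field names, the sample record, and the `FORM_DEFAULTS` mapping are all assumptions made up for the example.

```python
# Hypothetical required fields for a subscriber record (illustrative only).
REQUIRED_FIELDS = ("first_name", "last_name", "email", "state")

# Assumed form defaults: a value equal to the default may mean "never filled in".
FORM_DEFAULTS = {"state": "AL"}


def completeness_issues(record):
    """Return a list of human-readable completeness problems for one record."""
    issues = []
    for field in REQUIRED_FIELDS:
        value = (record.get(field) or "").strip()
        if not value:
            issues.append(f"{field} is blank")
        elif FORM_DEFAULTS.get(field) == value:
            issues.append(f"{field} still has the default value {value!r}")
    return issues


# Made-up record with a blank last name and an untouched state default.
record = {"first_name": "Mike", "last_name": "", "email": "mike@example.com", "state": "AL"}
print(completeness_issues(record))
```

A default value cannot be told apart from a genuine answer (some subscribers really do live in Alabama), so a check like this is better suited to raising an alert than to discarding the record. A truncated email address would also pass this check, since the field is not blank; catching it requires a format rule.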

2. Conformity

The data must conform to the standards or rules set by the organization. This conformity in the records relates to data governance, which is especially crucial for industries that need to meet governmental regulations (e.g., healthcare and airlines). People in an organization define the criteria and guidelines that the data must meet. Next, they set the processes for the lifecycle of the data.

Examples of rules for data include:

● Currency – Is the field using American dollars, Euros, or something else? All the data should conform to and be using the same currency.

● Number format – Are the values for a field supposed to be integer or decimal? How many decimal places should there be for the decimal format? These rules seem simple, but when an organization deals with thousands of tables and hundreds of columns, constantly tracking each column by hand is enormously laborious and expensive.
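As a rough sketch of how the currency and number-format rules above might be expressed in code, the Python example below checks a single value against two assumed rules: the currency must be "USD" and the amount may have at most two decimal places. The rule values and the function name `conforms` are hypothetical; a real organization would define its own rules per column.

```python
from decimal import Decimal, InvalidOperation

# Assumed rules for one hypothetical "amount" column.
EXPECTED_CURRENCY = "USD"
MAX_DECIMAL_PLACES = 2


def conforms(amount_text, currency):
    """Check one value against the currency and number-format rules."""
    if currency != EXPECTED_CURRENCY:
        return False
    try:
        amount = Decimal(amount_text)
    except InvalidOperation:
        return False  # the text is not a number at all
    if not amount.is_finite():
        return False  # rejects "NaN" and "Infinity"
    # The exponent is -2 for "19.99" and 0 for "20", so negate it to count decimal places.
    return -amount.as_tuple().exponent <= MAX_DECIMAL_PLACES


print(conforms("19.99", "USD"))
print(conforms("19.999", "USD"))
print(conforms("19.99", "EUR"))
```

Using `Decimal` on the original text, rather than converting to a float first, keeps the number of decimal places exactly as it was submitted.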

3. Uniqueness

Uniqueness means that there should be neither duplicate data sets nor duplicate records within data sets. A duplicate could be a match on all values in a row or a match in a particular field. The organization determines the level of uniqueness for a specific data set. Companies that deal with credit card transactions have a heightened sense of concern for this issue.
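The difference between matching on a particular field and matching on every value in a row can be illustrated with a short Python sketch. The transaction records and the helper name `duplicate_keys` are invented for this example.

```python
from collections import Counter


def duplicate_keys(rows, key_fields):
    """Return the key tuples that occur more than once, based on the chosen fields."""
    counts = Counter(tuple(row[field] for field in key_fields) for row in rows)
    return [key for key, count in counts.items() if count > 1]


# Hypothetical card transactions (made-up values).
transactions = [
    {"txn_id": "T1", "card": "1111", "amount": "25.00"},
    {"txn_id": "T2", "card": "2222", "amount": "40.00"},
    {"txn_id": "T1", "card": "1111", "amount": "25.00"},  # exact repeat of the first row
    {"txn_id": "T3", "card": "2222", "amount": "12.50"},
]

# Match on a single field.
print(duplicate_keys(transactions, ["txn_id"]))
# Match on all values in the row.
print(duplicate_keys(transactions, ["txn_id", "card", "amount"]))
```

Which fields go into `key_fields` is the "level of uniqueness" decision: two transactions on the same card are normal, while two rows with the same transaction ID usually are not.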

4. Accuracy

Here are examples of inaccurate data:

● Values are in the incorrect column. For instance, the “First Name” field contains the person’s last name and vice versa.

● The data contains a misspelling. An example is the value “Gren” for favorite color.

● The field contains a junk value. A scenario: a user doesn’t wish to provide an email address, so they fill the field with a random set of special characters, letters, and numbers (e.g., “#$345844^^9@O@)_$LF”).

● The data is invalid. Suppose there is a field for the number of teddy bears in stock. Valid values include 100, 10, and 0. Invalid values include 2.5 and -15, because a stock count must be a whole number that is not negative.
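Some of the accuracy problems listed above can be caught with simple rules. The Python sketch below covers three of them: an invalid stock count, a junk email value, and a misspelled color. The email pattern is deliberately loose, and the list of known colors is an assumption for illustration; neither is a complete validator. Swapped columns, such as a last name in the "First Name" field, generally cannot be detected by a per-value rule like these.

```python
import re

# Deliberately loose email pattern: an assumption for this sketch, not a full validator.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Assumed list of accepted answers for a hypothetical "favorite color" field.
KNOWN_COLORS = {"red", "green", "blue", "yellow"}


def is_valid_stock_count(value):
    """A stock count must be a whole number that is zero or greater."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool) and value >= 0


def looks_like_email(text):
    """Return True when the whole string has a plausible email shape."""
    return EMAIL_PATTERN.fullmatch(text) is not None


def is_known_color(text):
    """Return True when the value is in the accepted list, ignoring case."""
    return text.strip().lower() in KNOWN_COLORS


for count in (100, 10, 0, 2.5, -15):
    print(count, is_valid_stock_count(count))
print(looks_like_email("#$345844^^9@O@)_$LF"))
print(is_known_color("Gren"))
```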

https://www.youtube.com/watch?v=N9olq42z-AE

Characteristics of a Data Monitoring Tool

For a data monitoring solution to deliver the maximum business benefits, it should have the following characteristics:

● Granularity – The solution must dig into the fine details of the standards and the data. This level of detail ensures accurate monitoring.

● Persistence – The checks must run continuously rather than at a single moment in time. Continuous monitoring with a reference to history allows for early detection of data drift and deviation from expected behavior.

● Automation – Automating the monitoring process frees up personnel and minimizes mistakes. New data monitoring technology leverages artificial intelligence (AI) and machine learning (ML) to understand data quality.

● Ubiquity – Businesses can apply the monitoring tool anywhere, whether it’s on-prem or in the cloud.

● Timeliness – The tool checks to ensure the data being propagated throughout the organization is the latest and freshest. The system also sends out real-time notifications when there is deviation from expected behavior.
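To make the persistence and timeliness characteristics more concrete, here is a minimal Python sketch of two checks: comparing the latest value of a metric against its history, and flagging data that has not been refreshed recently. The three-standard-deviation threshold, the 24-hour freshness window, and the daily row counts are all assumptions chosen for illustration. AI/ML-based tools use more sophisticated methods than this simple statistical rule.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def deviates_from_history(history, latest, threshold=3.0):
    """Flag the latest value if it is more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]  # history is constant, so any change is a deviation
    return abs(latest - mean(history)) / spread > threshold


def is_stale(last_loaded_at, now, max_age=timedelta(hours=24)):
    """Flag data whose most recent load is older than the allowed age."""
    return now - last_loaded_at > max_age


# Hypothetical daily row counts for one table.
daily_row_counts = [1000, 1020, 990, 1010, 1005]
print(deviates_from_history(daily_row_counts, 1008))
print(deviates_from_history(daily_row_counts, 400))

# Hypothetical load time, two days before the check runs.
loaded = datetime(2024, 1, 1, tzinfo=timezone.utc)
checked = datetime(2024, 1, 3, tzinfo=timezone.utc)
print(is_stale(loaded, checked))
```

Keeping the history is what makes the first check possible: a row count of 400 is only suspicious because earlier days were consistently near 1,000.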

Automate Data Monitoring with FirstEigen

Data monitoring is a blind spot for many companies. Even the best companies today monitor less than five percent of their data. The remaining 95% or more is dark: unmonitored, unvalidated, and unreliable. With the tremendous growth in microservices, this has become a logistical nightmare.

FirstEigen can help companies alleviate that pain point. DataBuck is automated data monitoring and validation software that leverages AI/ML. Its serverless and no-code features simplify data quality.

Would you like to know more? Contact us today!

Check out these articles on Data Trustability, Observability, and Data Quality.

- 6 Key Data Quality Metrics You Should Be Tracking (https://firsteigen.com/blog/6-key-data-quality-metrics-you-should-be-tracking/)

- How to Scale Your Data Quality Operations with AI and ML (https://firsteigen.com/blog/how-to-scale-your-data-quality-operations-with-ai-and-ml/)

- 12 Things You Can Do to Improve Data Quality (https://firsteigen.com/blog/12-things-you-can-do-to-improve-data-quality/)

- How to Ensure Data Integrity During Cloud Migrations (https://firsteigen.com/blog/how-to-ensure-data-integrity-during-cloud-migrations/)